Why test in production?

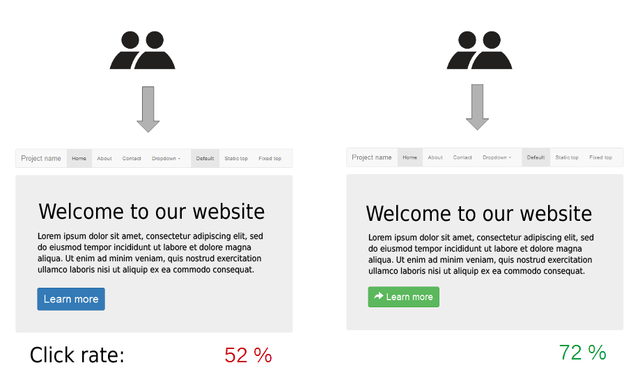

A Machine Learning lifecycle starts with experiments, moves on to building training pipelines, and ends with deployment. So, are you done after deployment? I wish life was that simple. Machine Learning systems differ from traditional software and ETL systems primarily because they depend on data. Distribution shifts are commonplace in the ML world, and they are hard to detect and account for, so models need continuous updating. But deploying an updated model poses an inherent risk: there is no feedback from user experience until the model is live. You may perform exhaustive model evaluation before deploying, yet models still degrade because the world is dynamic. Moreover, offline evaluation methods don’t reveal anything about the causal relationship between a new rollout and user experience. This necessitates online experimentation: after deployment, it becomes imperative to test the rollouts continuously. We test in production to achieve this. One of the best-known strategies for testing models in production is A/B testing, enabled by Blue-Green deployment. And if you don’t want to risk any fallout in user experience, you may use Silent Deployments too.

Here are the excerpts on Silent Deployment and Blue-Green Deployment from our article on Machine Learning Deployment Strategies.

Silent deployment

This mode deploys a new model while keeping the old one intact. Both versions run in parallel, but the user isn’t exposed to the new model immediately. Instead, we log the new model’s predictions to identify any bugs before switching users over to it. The advantage of this pattern is that we get sufficient time to evaluate the new model. On the downside, it consumes a lot of resources, since every datapoint/feature set is passed to two models.

A variant of silent deployment is the shadow mode. Here, the ML model shadows a human operator for some time before it is used for partial or full automation.
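To make the silent pattern concrete, here is a minimal Python sketch of the idea. It is an illustration only, not how any particular platform implements it: the names `serve_silently`, `champion`, `challenger`, and `shadow_log` are hypothetical, and the models are stand-in functions.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical stand-ins: a "model" is any function from features to a prediction.
Model = Callable[[float], float]
ShadowRecord = Tuple[float, float, Optional[float]]


def serve_silently(
    features: float,
    champion: Model,
    challenger: Model,
    shadow_log: List[ShadowRecord],
) -> float:
    """Answer with the champion; record the challenger's output for later comparison."""
    served = champion(features)
    try:
        shadow: Optional[float] = challenger(features)
    except Exception:
        # A bug in the challenger must never affect what the user receives.
        shadow = None
    shadow_log.append((features, served, shadow))
    return served
```

The user only ever sees the return value, which comes from the champion. The log is what gets inspected before users are switched to the new model, and the second model call on every request is the resource cost mentioned above.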

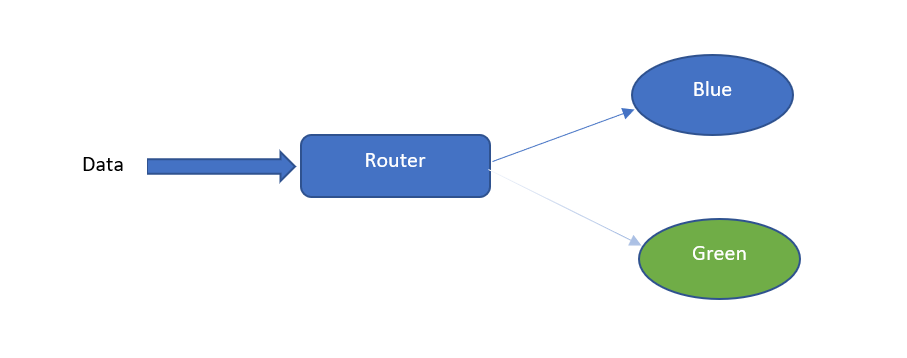

Blue-Green Deployment

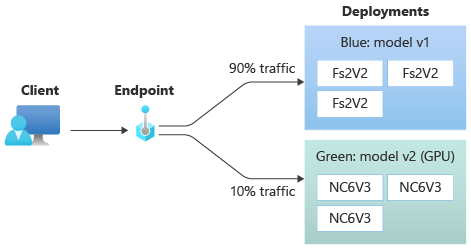

In this strategy, we maintain two nearly identical production environments. The current environment is called the blue environment, while the new candidate environment is called the green environment.

Traffic is gradually routed from the blue to the green environment, which keeps downtime for users to a minimum. Rolling back is very easy with this deployment strategy, as it is only a router switch. Read more on blue-green deployment here.
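The router behaviour described above can be sketched as a weighted random choice between the two environments. This is a simplified simulation under assumed names (`pick_deployment`, `simulate`); a real router would sit in front of actual services rather than count requests.

```python
import random
from collections import Counter
from typing import Dict


def pick_deployment(weights: Dict[str, int], rng: random.Random) -> str:
    """Choose an environment with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def simulate(weights: Dict[str, int], n_requests: int, seed: int = 0) -> Counter:
    """Count how many of n_requests land on each environment."""
    rng = random.Random(seed)
    return Counter(pick_deployment(weights, rng) for _ in range(n_requests))


# Start of the rollout: roughly 10% of requests reach green.
early = simulate({"blue": 90, "green": 10}, n_requests=1000)

# Rollback is only a change of weights: all requests go back to blue.
rolled_back = simulate({"blue": 100, "green": 0}, n_requests=1000)
```

Because the split lives entirely in the weights, moving from 10% to 50% to 100% green, or back to 0%, does not require redeploying either environment.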

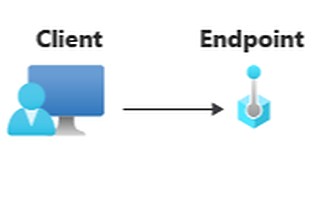

Test in Production with Azure Machine Learning Managed Online Endpoints

A question may arise: how do we implement A/B testing with techniques like Shadow Deployment or Blue-Green Deployment? Fortunately, cloud services like Azure ML support such MLOps patterns. Traditionally, model deployment in Azure Machine Learning was enabled by services like Azure Container Instances or Azure Kubernetes Service. With these services, maintaining the infrastructure was challenging enough, let alone setting up advanced patterns like test in production. With the introduction of Managed Online Endpoints, however, these patterns have become practical.

At this point, we recommend reading our tutorial on Azure ML Managed Online Endpoints. So, what changes with Managed Online Endpoints? With infrastructure management abstracted away, ML Engineers can focus on setting up advanced designs for A/B tests. Let’s take a look.

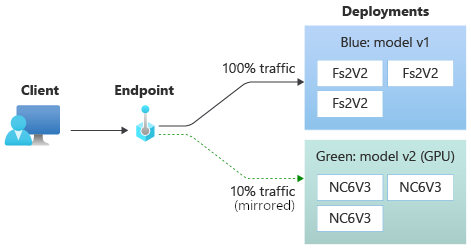

Silent Deployment with Azure ML Managed Online Endpoints

This can be implemented in Azure ML using the mirrored traffic feature. In this mode, the candidate or challenger model (the new/updated model) is deployed alongside the champion model (the existing model). A percentage of live traffic is mirrored and sent to the challenger, without exposing the challenger to end users.

Assuming that the challenger is in place as a deployment named green, below is the CLI command to mirror 10% of live traffic to it:

az ml online-endpoint update --name $ENDPOINT_NAME --mirror-traffic "green=10"

Blue-Green Deployment with Azure ML Managed Online Endpoints

This is the more traditional blue-green approach, employed for A/B testing. Here, a fraction of live traffic is served by the challenger, so that we can collect user feedback on it.

Assuming that the challenger is live as a deployment named green, below is the CLI command to send 10% of live traffic to it and keep 90% on blue:

az ml online-endpoint update --name $ENDPOINT_NAME --traffic "blue=90 green=10"
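A gradual rollout usually repeats this command with a growing share for green. The sketch below only builds the command strings for a sequence of steps, so they can be reviewed or run one at a time. The helper names (`traffic_arg`, `rollout_commands`) and the step values are hypothetical; the command shape is the one shown above, and the deployments are assumed to be named blue and green.

```python
from typing import List


def traffic_arg(green_percent: int, blue: str = "blue", green: str = "green") -> str:
    """Build the value for --traffic so that the two shares always sum to 100."""
    if not 0 <= green_percent <= 100:
        raise ValueError("green_percent must be between 0 and 100")
    return f"{blue}={100 - green_percent} {green}={green_percent}"


def rollout_commands(endpoint_name: str, steps: List[int]) -> List[str]:
    """Return one CLI command per rollout step; nothing is executed here."""
    return [
        f'az ml online-endpoint update --name {endpoint_name} --traffic "{traffic_arg(p)}"'
        for p in steps
    ]


# Hypothetical ramp: 10% -> 50% -> 100% of traffic on green.
commands = rollout_commands("my-endpoint", [10, 50, 100])
```

Here `commands[0]` carries `--traffic "blue=90 green=10"`, the same split as the command above, and the last entry moves all traffic to green. Rolling back is the same command with the green share set to 0.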

Conclusion

This is a gentle conceptual introduction to testing ML models in production in Azure ML with Managed Online Endpoints. We do not guarantee its completeness or accuracy. For details, read the official Microsoft documentation on Safe rollout for online endpoints.