
Tag: azure machine learning, managed online endpoint

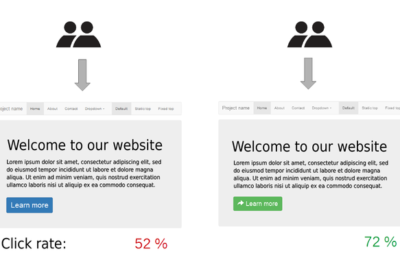

Test in Production with Azure ML Managed Online Endpoints

Prasad Kulkarni · Sep 20, 2022

Why test in production? A machine learning lifecycle starts with experimentation, moves on to building training pipelines, and ends with deployment. So, are you done...

Batch Inferencing in Azure ML using Managed Online Endpoints

Prasad Kulkarni · Jul 26, 2022

Batch inferencing with Azure ML is complex. It entails creating a compute cluster, creating a parallel run step, and running the batch inferencing pipeline. With...

Secure Azure ML Managed Online Endpoints with Network Isolation

Prasad Kulkarni · Jun 16, 2022

In one of our previous articles, we introduced Managed Online Endpoints in Azure Machine Learning and deployed an endpoint in a publicly accessible Azure Machine Learning workspace. However,...