Since 2012, Deep Learning has exploded and has become synonymous with Artificial Intelligence, and fairly so. Deep Neural Networks now perform remarkably well on tasks that were once beyond the purview of Machine Learning. Be it Computer Vision or Natural Language Processing, Deep Learning is making strides everywhere. Architectures like the Multi-Layer Perceptron (MLP), the Convolutional Neural Network (CNN) and the Recurrent Neural Network (RNN) have been ruling the roost.

Moreover, these algorithms can seem almost magical. Take a step back to traditional Machine Learning: there, designing the right features was the key to good performance. This was especially true for linear models such as Logistic Regression and linear SVMs. Ensemble models could learn complex patterns with fewer feature transforms.

Deep Neural Networks such as Multi-Layer Perceptrons, however, can learn complex patterns with no feature engineering at all. In this article, we show that while Neural Networks can learn features and fit complex decision boundaries on their own, appropriate feature transforms still help a great deal in optimizing the training process.

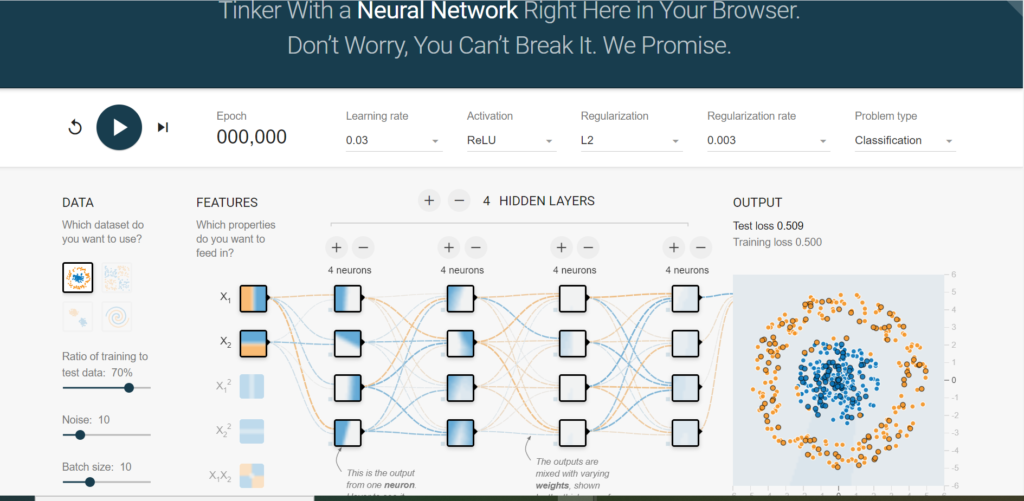

But first things first: let us introduce the TensorFlow Playground, a fun and interactive application for learning about Neural Networks. You can check it out here.

Setup

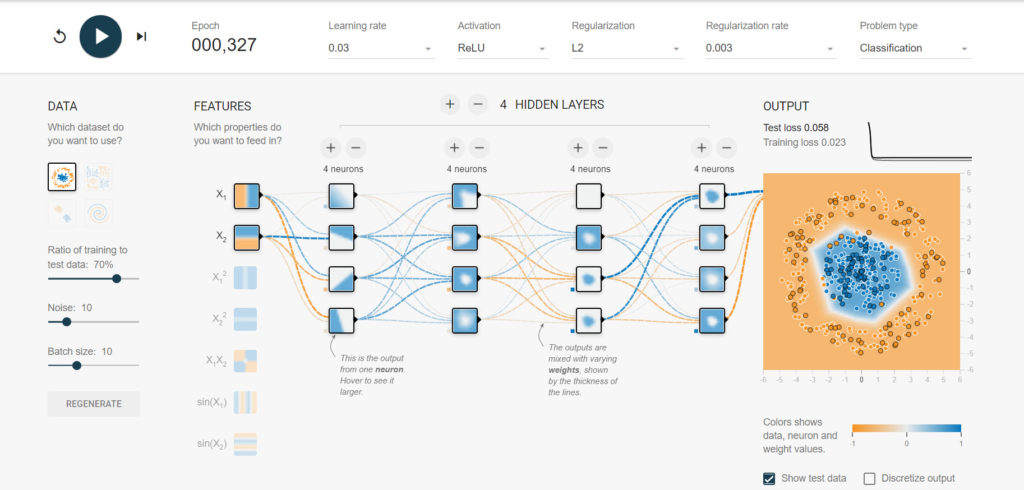

The playground is a simple browser-based application as shown below:

For this article, we restrict ourselves to the architecture shown above. The dataset consists of data points arranged in two concentric circles. To simulate real-world data, we introduce 10 per cent noise. The darker points represent the test data.
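The playground generates this data in the browser. To experiment offline, here is a minimal NumPy sketch of a similar dataset with a train/test split. The class radii, the Gaussian form of the noise and the helper names `make_circles` and `split` are our own assumptions, not the playground's actual generator.

```python
import numpy as np


def make_circles(n_samples=500, noise=0.1, radius=5.0, seed=0):
    """Label 1: points inside a disc; label 0: points in a surrounding ring.

    The radii and the Gaussian noise model are illustrative assumptions,
    not the TensorFlow Playground's own generator.
    """
    rng = np.random.default_rng(seed)
    n_inner = n_samples // 2
    n_outer = n_samples - n_inner
    # Inner class: distance from the origin drawn from [0, 0.5 * radius]
    r_inner = rng.uniform(0.0, 0.5 * radius, n_inner)
    # Outer class: distance from the origin drawn from [0.7 * radius, radius]
    r_outer = rng.uniform(0.7 * radius, radius, n_outer)
    r = np.concatenate([r_inner, r_outer])
    theta = rng.uniform(0.0, 2.0 * np.pi, n_samples)
    X = np.column_stack([r * np.cos(theta), r * np.sin(theta)])
    # "10 per cent noise": Gaussian jitter with std = noise * radius (assumed model)
    X += rng.normal(0.0, noise * radius, size=X.shape)
    y = np.concatenate([np.ones(n_inner), np.zeros(n_outer)])
    return X, y


def split(X, y, test_fraction=0.5, seed=0):
    """Shuffle the points, then hold out `test_fraction` of them as test data."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(y))
    n_test = int(len(y) * test_fraction)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    return X[train_idx], y[train_idx], X[test_idx], y[test_idx]


X, y = make_circles(n_samples=500, noise=0.1)
X_train, y_train, X_test, y_test = split(X, y)
print(X_train.shape, X_test.shape)  # (250, 2) (250, 2)
```

Raising `noise` pushes points across the gap between the two classes, which is what makes the test data harder to fit than the training data.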

We will now look at two cases: Deep Learning without Feature Engineering and Deep Learning with Feature Engineering.

Deep Neural Networks Without Feature Engineering

A preliminary exploratory analysis suggests that the two classes are separated by a circular boundary. Yet, in the first case, we pass only the plain features, i.e. X1 and X2. Running this configuration gives the following results.

The learned decision boundary is a polygon. However, the gap between the training and test loss shows that the model is overfitting to the training data.
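To reproduce the "plain features" setting outside the browser, here is a minimal NumPy sketch of a small network trained on X1 and X2 alone. It assumes a single hidden layer of 8 tanh units, full-batch gradient descent, noise-free circle data with assumed radii, and a hypothetical helper `train_mlp`; the playground's own architecture, batch size and learning rate differ, so the numbers will not match the screenshots.

```python
import numpy as np

# Noise-free circle data (assumed radii): label 1 for r < 2.5, label 0 for 3.5 <= r <= 5
rng = np.random.default_rng(0)
n = 400
r = np.concatenate([rng.uniform(0.0, 2.5, n // 2), rng.uniform(3.5, 5.0, n // 2)])
theta = rng.uniform(0.0, 2.0 * np.pi, n)
X = np.column_stack([r * np.cos(theta), r * np.sin(theta)])  # plain features X1, X2
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize the inputs
y = np.concatenate([np.ones(n // 2), np.zeros(n // 2)]).reshape(-1, 1)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_mlp(X, y, hidden=8, lr=0.5, epochs=5000, seed=1):
    """One tanh hidden layer, sigmoid output, full-batch gradient descent on cross-entropy."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 1.0 / np.sqrt(X.shape[1]), size=(X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), size=(hidden, 1))
    b2 = np.zeros(1)
    losses = []
    eps = 1e-12  # guards against log(0)
    for _ in range(epochs):
        # Forward pass
        H = np.tanh(X @ W1 + b1)
        p = sigmoid(H @ W2 + b2)
        losses.append(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))
        # Backward pass: for sigmoid + cross-entropy, dLoss/dlogit = p - y
        d_out = (p - y) / len(X)
        dW2, db2 = H.T @ d_out, d_out.sum(axis=0)
        d_hid = (d_out @ W2.T) * (1.0 - H ** 2)  # tanh'(z) = 1 - tanh(z)^2
        dW1, db1 = X.T @ d_hid, d_hid.sum(axis=0)
        # Gradient-descent update (in place, so predict() sees the final weights)
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2

    def predict(X_new):
        return (sigmoid(np.tanh(X_new @ W1 + b1) @ W2 + b2) >= 0.5).astype(float)

    return predict, losses


predict, losses = train_mlp(X, y)
accuracy = np.mean(predict(X) == y)
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, training accuracy {accuracy:.2f}")
```

Each tanh unit is a soft step across a straight line in the (X1, X2) plane, so the network approximates the circle by combining several such edges, which is why the boundary learned from plain features looks like a polygon. This sketch reports training accuracy only and uses noise-free data, so it does not reproduce the overfitting seen above.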

Deep Neural Networks With Feature Engineering

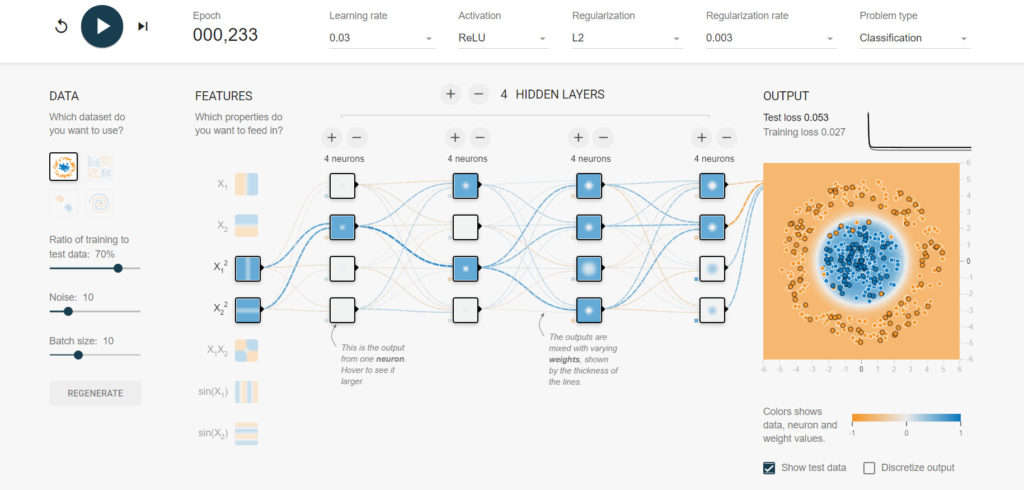

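We know that the equation of a circle centred at the origin is x^2 + y^2 = c. Hence, to obtain a circular decision boundary, we engineer the input features into X1^2 and X2^2. In this new feature space, the circle X1^2 + X2^2 = c becomes a straight line, so even a weighted sum of the inputs can trace it.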
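To see why the squared features help, the sketch below fits a plain logistic regression (a purely linear model, swapped in for illustration; it is not the playground's network) once on the raw X1, X2 and once on X1^2, X2^2, and reports training accuracy. The noise-free data, the radii and the helper name `fit_logistic` are illustrative assumptions.

```python
import numpy as np

# Noise-free circle data (assumed radii): label 1 for r < 2.5, label 0 for 3.5 <= r <= 5
rng = np.random.default_rng(0)
n = 400
r = np.concatenate([rng.uniform(0.0, 2.5, n // 2), rng.uniform(3.5, 5.0, n // 2)])
theta = rng.uniform(0.0, 2.0 * np.pi, n)
x1, x2 = r * np.cos(theta), r * np.sin(theta)
y = np.concatenate([np.ones(n // 2), np.zeros(n // 2)])


def fit_logistic(F, y, lr=0.5, epochs=2000):
    """Logistic regression (linear decision boundary) fit by full-batch gradient descent.

    Returns the training accuracy.
    """
    F = (F - F.mean(axis=0)) / F.std(axis=0)  # standardize each feature
    w = np.zeros(F.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(F @ w + b)))
        w -= lr * F.T @ (p - y) / len(y)  # gradient of mean cross-entropy w.r.t. w
        b -= lr * np.mean(p - y)          # gradient w.r.t. the bias
    return np.mean(((F @ w + b) >= 0) == (y == 1))


raw_acc = fit_logistic(np.column_stack([x1, x2]), y)
squared_acc = fit_logistic(np.column_stack([x1 ** 2, x2 ** 2]), y)
print(f"raw X1, X2:         {raw_acc:.2f}")
print(f"squared X1^2, X2^2: {squared_acc:.2f}")
```

On the raw inputs, no straight line can separate a disc from the ring around it, so the linear model stays far below what it reaches on the squared inputs, where the circular boundary has become a line.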

This feature transform gives us the following results:

The first thing to observe is the shape of the decision boundary: as expected, it is circular. Moreover, the algorithm converges faster, in 233 epochs as opposed to 327 in the previous case. Lastly, judging by the smaller gap between the training and test loss, there is less overfitting in this case!

Conclusion

Firstly, we encourage you to play with this fantastic tool. Secondly, while Deep Neural Networks are great at identifying complex patterns, providing them with well-designed features helps them learn faster and generalize better.
