A mathematical model is a representation of a real-world phenomenon. We use models to understand the world and act accordingly, in everything from space science to business. With the advent of Big Data and the growth of Data Science, modeling has taken centre stage. Learning from data is gaining traction, and AI/ML algorithms, including deep learning, are the talk of the town. Hence, to a beginner, it seems that a model is nothing but an AI/ML algorithm. That is not true. Some of the wiser folks know that there is Statistical Modeling too, and within it there are various approaches or mindsets. Let’s take a look.

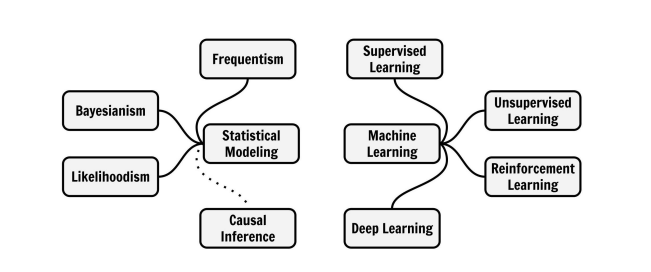

You may have gauged by now that there are two major mindsets/approaches to modeling the data:

- Statistical Modeling

- Machine Learning Modeling

Statistical Modeling, the traditional, age-old discipline, consists of various techniques like Frequentist Inference, Bayesian Inference, Likelihoodism and Causal Inference. On the other hand, we have the more recent Machine Learning paradigm. It encompasses Supervised Learning, Unsupervised Learning, Deep Learning, and Reinforcement Learning. Here is a summary of all the modeling techniques/mindsets:

Statistical Modeling

Statistical Modeling has been in practice for a few centuries. It owes its development to stalwarts like Gauss, Karl Pearson, and R. A. Fisher. It was the bedrock of much of the analysis done in the pre-computer era, and it has since been enhanced by the advent of superfast computers.

Statistical Modeling relies on elaborate mathematical tools and techniques developed over centuries. It rests on probability distributions and explicit assumptions about how the data were generated. It entails estimating parameters and drawing conclusions about the real world.

But there are different mindsets/approaches within statistical modeling. Two of the major ones are the Frequentist and Bayesian mindsets.

The Frequentist Mindset is about estimating the true parameters of a model. It is based on the frequentist interpretation of probability, i.e. the relative frequency of an event over a long run of repeated trials. It typically starts with a hypothesis and then tests whether the data are consistent with it.
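To make the hypothesis-testing idea concrete, here is a minimal sketch of an exact two-sided binomial test in plain Python. The coin-flip data (60 heads in 100 flips) and the function names are assumptions made up for illustration; they do not come from the book.

```python
from math import comb


def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def two_sided_p_value(successes, trials, p_null=0.5):
    """Exact two-sided binomial test.

    Adds up, under the null hypothesis, the probability of every outcome
    that is no more likely than the one actually observed.
    """
    observed = binomial_pmf(successes, trials, p_null)
    tolerance = observed * 1e-9  # guards against floating-point ties
    probs = [binomial_pmf(k, trials, p_null) for k in range(trials + 1)]
    return sum(prob for prob in probs if prob <= observed + tolerance)


# Hypothetical data: 60 heads in 100 flips; null hypothesis: the coin is fair.
p_value = two_sided_p_value(successes=60, trials=100)
print(f"p-value: {p_value:.4f}")
```

A small p-value means the data would be surprising if the null hypothesis were true. It is not the probability that the hypothesis itself is true.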

On the other hand, the Bayesian Mindset is derived from the subjective interpretation of probability. Here, the modeler assumes a prior distribution over the parameters and updates it when new information arrives. This is enabled by the famous Bayes’ theorem.
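As a small illustration of updating a prior, the sketch below uses the textbook Beta-Binomial conjugate pair, where a Beta prior on a success probability stays a Beta distribution after observing binary outcomes. The uniform Beta(1, 1) prior, the data, and the function names are assumptions chosen for the example.

```python
def update_beta_prior(alpha, beta, successes, failures):
    """Posterior Beta parameters after observing binary outcomes.

    For a Beta(alpha, beta) prior and binomial data, Bayes' theorem gives a
    Beta(alpha + successes, beta + failures) posterior.
    """
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Assumed prior: Beta(1, 1), i.e. uniform over the success probability.
prior = (1, 1)
# Hypothetical data: 60 successes and 40 failures.
posterior = update_beta_prior(*prior, successes=60, failures=40)

print("prior mean:", beta_mean(*prior))
print("posterior parameters:", posterior)
print("posterior mean:", round(beta_mean(*posterior), 4))
```

When more data arrive, the posterior can serve as the prior for the next update, which is the "update when new information arrives" step in practice.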

There is another viewpoint or modeling mindset called Likelihoodism, which is a more purist view. It revolves around the likelihood function, which measures how well each candidate value of the parameters explains the observed data. The likelihood function is built from an assumed distribution for the data, usually one of the standard distributions.
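One way to see the likelihood function at work is to compare two candidate parameter values through their likelihood ratio. The sketch below assumes binomial data (60 successes in 100 trials, made up for illustration) and hypothetical function names.

```python
from math import exp, log


def log_likelihood(p, successes, trials):
    """Binomial log-likelihood of success probability p, up to a constant.

    The binomial coefficient is omitted because it does not depend on p and
    cancels whenever two values of p are compared.
    """
    return successes * log(p) + (trials - successes) * log(1 - p)


def likelihood_ratio(p_a, p_b, successes, trials):
    """How many times better p_a explains the data than p_b."""
    return exp(
        log_likelihood(p_a, successes, trials)
        - log_likelihood(p_b, successes, trials)
    )


# Hypothetical data: 60 successes in 100 trials.
ratio = likelihood_ratio(0.6, 0.5, successes=60, trials=100)
print(f"p=0.6 is supported about {ratio:.1f} times more than p=0.5")
```

The ratio expresses relative evidence between two parameter values only. It uses neither a prior nor a long-run error rate.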

Lastly, none of the above mindsets accounts for cause-and-effect relationships. This brings us to the Causal Inference Mindset. Traditionally, causal relationships have been established through experimental design, viz. Randomized Controlled Trials. However, in many cases it is infeasible to perform experiments, which calls for an approach to studying causal relationships in observational data. While Causal Inference is close to traditional statistics, it also finds application in Machine Learning, which is a nice segue into the next major class of modeling mindsets, i.e. Machine Learning Modeling.
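To illustrate why observational data need special care, here is a rough sketch of one common adjustment technique, stratifying on a confounder. The counts are invented for illustration, and the sketch assumes that disease severity is the only confounder, which is rarely guaranteed in practice.

```python
# Hypothetical observational counts: stratum -> group -> (recovered, total).
# Severe cases were treated more often, so severity confounds the comparison.
data = {
    "mild": {"treated": (90, 100), "untreated": (340, 400)},
    "severe": {"treated": (240, 400), "untreated": (50, 100)},
}


def naive_effect(data):
    """Difference in recovery rates, ignoring the confounder."""
    totals = {"treated": [0, 0], "untreated": [0, 0]}
    for groups in data.values():
        for group, (recovered, total) in groups.items():
            totals[group][0] += recovered
            totals[group][1] += total
    rates = {g: recovered / total for g, (recovered, total) in totals.items()}
    return rates["treated"] - rates["untreated"]


def adjusted_effect(data):
    """Average of per-stratum effects, weighted by stratum size."""
    population = sum(
        total for groups in data.values() for _, total in groups.values()
    )
    effect = 0.0
    for groups in data.values():
        stratum_size = sum(total for _, total in groups.values())
        t_recovered, t_total = groups["treated"]
        u_recovered, u_total = groups["untreated"]
        stratum_effect = t_recovered / t_total - u_recovered / u_total
        effect += (stratum_size / population) * stratum_effect
    return effect


print("naive effect:", round(naive_effect(data), 3))
print("adjusted effect:", round(adjusted_effect(data), 3))
```

With these made-up counts the naive comparison makes the treatment look harmful, while the comparison within each severity stratum points the other way.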

Machine Learning Modeling

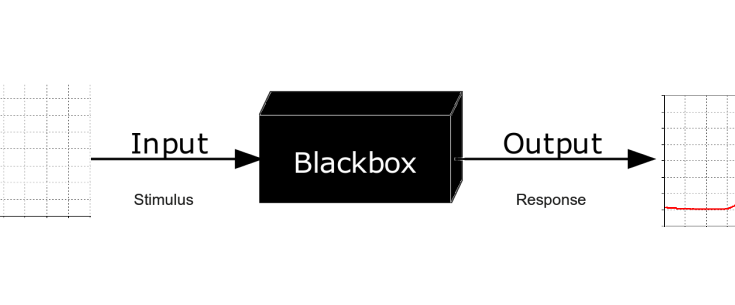

Statistical Modeling predates the computer era. But with the explosion of computing power and available data, algorithms have taken centre stage in the modeling world. The Machine Learning mindset starts with computer algorithms, unlike the Statistical mindset, in which the computer is just another tool.

One of the simplest and most widely used paradigms in Machine Learning Modeling is the Supervised Learning Mindset. It forms the bedrock of most predictive analytics tasks. A problem needs to be converted into a supervised learning task by creating labels and features. The key assumption here is that the modeler knows the value to be predicted for the examples used in training.
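The features-and-labels setup can be sketched with one of the simplest supervised learners, a least-squares line fitted to a single feature. The tiny dataset and the function names below are assumptions made up for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Predicted label for a new feature value."""
    return slope * x + intercept


# Hypothetical training data: features (xs) with known labels (ys).
features = [1.0, 2.0, 3.0, 4.0]
labels = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(features, labels)
print("prediction for x = 5:", predict(5.0, slope, intercept))
```

The labels are what make this supervised: the model is fitted to known answers and then asked to predict the answer for an unseen input.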

However, sometimes the modeler does not know what he or she is looking for and simply wants to find patterns in the data. This brings us to the Unsupervised Learning Mindset.
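Clustering is a typical example of finding patterns without labels. The following is a minimal sketch of k-means on one-dimensional data. The points, the starting centers, and the function name are assumptions for illustration, and a real project would more likely use an established library.

```python
def k_means_1d(points, initial_centers, iterations=10):
    """Very small k-means for one-dimensional data.

    Alternates between assigning each point to its nearest center and
    moving each center to the mean of the points assigned to it.
    """
    centers = list(initial_centers)
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for point in points:
            nearest = min(
                range(len(centers)), key=lambda i: abs(point - centers[i])
            )
            clusters[nearest].append(point)
        # An empty cluster keeps its previous center.
        centers = [
            sum(cluster) / len(cluster) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
    return centers


# Hypothetical unlabeled data with two visible groups.
points = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
centers = k_means_1d(points, initial_centers=[points[0], points[-1]])
print("cluster centers:", [round(c, 2) for c in centers])
```

No labels are supplied anywhere. The grouping emerges from the distances between the points alone.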

Next comes the Deep Learning Mindset. It differs from the supervised learning mindset in approach. It uses deep neural networks with complex architectures that can take in a wide variety of data and absorb much of the feature engineering effort. Having said that, Deep Neural Networks are used extensively in Unsupervised Learning and Reinforcement Learning settings too.

Lastly, we have the Reinforcement Learning Mindset. None of the previous mindsets interacts with the environment in which the data is generated. In this paradigm, the model acts in a dynamic world and is driven by rewards for attaining a goal. Unlike the above three paradigms, Reinforcement Learning adapts to a changing world.
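The reward-driven loop can be sketched with a multi-armed bandit, one of the simplest reinforcement learning settings. The example below uses an epsilon-greedy strategy. The reward probabilities, the parameter values, and the function name are assumptions chosen for illustration.

```python
import random


def run_epsilon_greedy(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a Bernoulli multi-armed bandit.

    With probability epsilon the agent explores a random arm; otherwise it
    exploits the arm with the highest estimated reward so far.
    """
    rng = random.Random(seed)
    n_arms = len(reward_probs)
    counts = [0] * n_arms
    estimates = [0.0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])
        # The environment responds with a reward of 1 or 0.
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental update of the running mean reward for this arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


# Hypothetical environment: three arms with hidden reward probabilities.
estimates, counts = run_epsilon_greedy([0.2, 0.5, 0.8])
print("estimated rewards:", [round(e, 2) for e in estimates])
print("pulls per arm:", counts)
```

The agent never sees the true probabilities. It learns them only by acting and observing rewards, which is the interaction with the environment that sets this mindset apart.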

Which is the best mindset?

It is humanly impossible to be a master of all mindsets/paradigms. Every modeler has his or her strengths and weaknesses. However, it is ideal for a modeler not to stick to one mindset exclusively. A modeler should be flexible enough to adopt another mindset when one doesn’t yield satisfactory results.

P.S.

This article is inspired by the recent book Modeling Mindsets by Christoph Molnar. You can buy the book Modeling Mindsets here.