Classification is one of the most widely used supervised Machine Learning technique. In classification, the target variable takes a limited set of values. For this discussion, let us restrict ourselves to the binary classification scenario. In binary classification, the target variable takes only 2 distinct values, for instance, either 0/1, True/False.

The simplest metric to gauge a classification model’s performance is Accuracy. It is the percentage of correctly classified points. However, there is another definition of accuracy. However, before that let’s introduce a few terms:

- True Positive(TP): The number of points where both predicted and actual values are positive.

- False Positive(FP): The number of points where predicted value is positive and actual values are negative.

- True Negative(TN): The number of points where both predicted and actual values are negative.

- False Negative(FN): The number of points where predicted value is negative and actual values are positive.

In these terms, the definition of Accuracy is (TP+TN)/(TP+FP+TN+FN) i.e correctly classified (TP and TN) by the total number of points.

Skewed classes

Having said that, let’s go through a scenario where accuracy falls on its head. The datasets we find the real world are rarely balanced. However, in some scenarios, the class distribution is highly skewed. A classic example of this could be found in cancer research. Let us assume that we want to determine if a tumour is cancerous or not. In cancer research, it is relatively difficult to find instances of a tumour being malignant. Let’s assume that the distribution of classes is 95:5 i.e 95 non-malignant ones while 5 are malignant.

If we use accuracy as a metric, in this case, we might end up having a high accuracy even though the model might be useless. To elaborate, in case of the above scenario, suppose that we can predict that 85 out of 95 cells are non-cancerous for the non-cancerous ones, while 0 out of 5 cancerous ones have been predicted rightly. Even with such a practically useless model, we have an accuracy of 85 %. This scenario is called a case of skewed classes in ML. To overcome the limitations of accuracy, we have two metrics viz. precision and recall.

Also read: Knowing when to consider Machine Learning

Precision and Recall

Firstly, let’s define the expression for both the quantities.

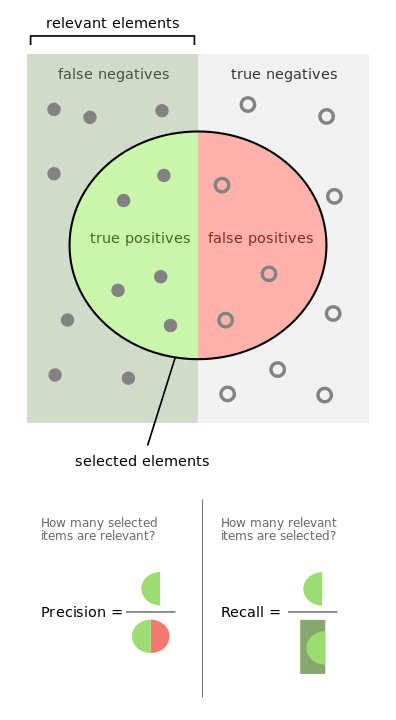

Precision = TP/(TP+FP)

Recall = TP/(TP + FN)

Before we go further, we should be clear that these two quantities revolve around the positive class, which is the class under consideration. This is a matter of convention. Now, let’s try to understand the logic behind the formulas.

The word precision comes from the English word precise. It means how precise the model is. It comes from a key question that out of the points classified as positive, how many were actually positive? The term TP+FP i.e. the denominator represents the total number of points classified as positive. Moreover, the numerator i.e. True positive gives away points which were classified correctly as positive. Hence, Precision is the ratio of points correctly classified positive class by the total number of points classified as positive.

Now, coming to recall, it originates from the verb of remembering. It is analogous to the ability of the human mind to retrieve correct information. With machine learning, it could be thought of as the model’s ability to recall the positive class appropriately. To elaborate, it is the number of correctly classified positive points among all the positive points. It is like asking that out of all the positive points, how many were correctly classified? The term TP + FN is the total number of positive points and recall is the ratio of correctly classified points to the number of points belonging to that class, actually. To summarise, the below image might help.

F1 Score: Geometric mean of Precision and Recall

One might wonder why do we need two metrics to judge a model. We do have a metric called F1 score, which combines both precision and recall into one. It is the geometric mean of the two. However, the problem with F1 score is that it is not interpretable i.e. one cannot dig deeper into the behaviour of the model on each class using precision and recall matrix.

Conclusion

Hope you find this article useful and intuitive. We have tried to present it to the best of our knowledge. However, we do not claim the accuracy and completeness of this article.

P.C. Precision and recall