Functional requirements of a Machine Learning Infrastructure

We have been discussing Data Science, Machine Learning System Design and MLOps for a while in our articles, and have touched upon various aspects of them. For instance, we covered ML Training Pipelines and Model Deployment using Azure ML Managed Online Endpoints. However, supporting all of this requires an effective Machine Learning Infrastructure. The infrastructure should be generic enough to support most use cases, while giving Data Scientists the flexibility to use the tools of their choice.

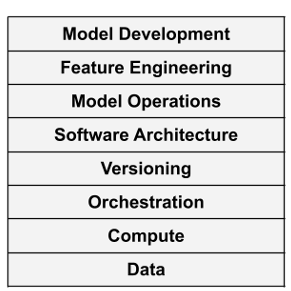

However, before diving into what a Machine Learning Infrastructure should comprise, we need to understand what functionality we want from it. The following components make up the functional requirements of a Machine Learning Infrastructure:

Data

Data is the foundation of any Data Science effort. Versatile data storage, along with robust data management practices, forms the backbone of any data science/machine learning effort.

Compute

At the end of the day, Machine Learning is a computationally intensive task. Building models on the data requires powerful compute.

Orchestration

The different stages of the Machine Learning lifecycle are interconnected. For instance, the Training/Retraining workflow feeds the Deployment/Inference workflow. These workflows need to be orchestrated appropriately.
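To make "interconnected workflows" concrete, here is a minimal sketch that models a training-to-deployment workflow as a dependency graph and runs each step only after its upstream steps have finished. The step names are hypothetical, and Python's standard-library `graphlib` (3.9+) stands in for a real orchestrator; it only records the execution order instead of launching jobs.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each step maps to the set of steps it depends on.
PIPELINE = {
    "ingest_data": set(),
    "validate_data": {"ingest_data"},
    "build_features": {"validate_data"},
    "train_model": {"build_features"},
    "evaluate_model": {"train_model"},
    "register_model": {"evaluate_model"},
    "deploy_model": {"register_model"},
    # Drift monitoring compares live traffic against the training features.
    "monitor_drift": {"deploy_model", "build_features"},
}


def run_pipeline(dag):
    """Return the steps in an order where every dependency runs first."""
    executed = []
    for step in TopologicalSorter(dag).static_order():
        # A real orchestrator would submit a job here; this sketch only records it.
        executed.append(step)
    return executed


if __name__ == "__main__":
    print(run_pipeline(PIPELINE))
```

The design choice is the usual one for pipeline tools: declare dependencies, not an explicit sequence, so retraining can re-trigger everything downstream of a changed step.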

Versioning

In traditional Software Engineering, Versioning covers Code and Dependencies. With Machine Learning, however, Versioning becomes more complex: apart from those artifacts, ML workflows also need to track Experiments, Models and Data.
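One way to see why ML versioning is harder is to fingerprint everything that defines a training run. The sketch below is a hypothetical, stdlib-only example (not the API of any experiment-tracking tool) that hashes the code, the data, the hyperparameters and the pinned dependencies into a single run id; the dependency name and version are placeholders.

```python
import hashlib
import json
import platform
from dataclasses import asdict, dataclass
from typing import Dict, Tuple


def sha256_hex(payload: bytes) -> str:
    """Content hash used as a version fingerprint."""
    return hashlib.sha256(payload).hexdigest()


@dataclass(frozen=True)
class RunVersion:
    """Everything needed to identify (and later reproduce) one training run."""
    code_hash: str
    data_hash: str
    params_hash: str
    python_version: str
    dependencies: Tuple[Tuple[str, str], ...]

    def run_id(self) -> str:
        # Identical inputs give the same id; changing any artifact gives a new one.
        blob = json.dumps(asdict(self), sort_keys=True).encode()
        return sha256_hex(blob)[:12]


def version_run(code: str, data: bytes, params: Dict[str, object],
                dependencies: Dict[str, str]) -> RunVersion:
    return RunVersion(
        code_hash=sha256_hex(code.encode()),
        data_hash=sha256_hex(data),
        # sort_keys makes the hash independent of dict insertion order
        params_hash=sha256_hex(json.dumps(params, sort_keys=True).encode()),
        python_version=platform.python_version(),
        dependencies=tuple(sorted(dependencies.items())),
    )


if __name__ == "__main__":
    deps = {"example-ml-lib": "1.0.0"}  # hypothetical pinned dependency
    v1 = version_run("def train(): ...", b"x,y\n1,2\n", {"lr": 0.01}, deps)
    v2 = version_run("def train(): ...", b"x,y\n1,3\n", {"lr": 0.01}, deps)
    print(v1.run_id(), v2.run_id())  # different ids: only the data changed
```

In traditional software only `code_hash` and `dependencies` would matter; the data and hyperparameter hashes are the ML-specific additions.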

Software Architecture

Data Science/Machine Learning products are software too. Hence, they need to be supported by a robust Software Architecture, including flexible and scalable training, serving and security infrastructure.

Model Operations

Model Operations covers model deployment, serving and maintenance. It includes setting up a model registry and defining model inference strategies. In short, it is the ability to manage and serve multiple models simultaneously to various applications and stakeholders in a scalable and reliable way.
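At its core, a model registry is a versioned catalogue of models plus a record of which version is live. The sketch below is a hypothetical in-memory registry (not the Azure ML or MLflow API) that assigns version numbers, keeps one production version per model, and serves several models side by side; the stage names and model names are assumptions.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class ModelVersion:
    name: str
    version: int
    predict: Callable[[Any], Any]
    stage: str = "staging"  # hypothetical lifecycle: staging -> production -> archived


class ModelRegistry:
    """Hypothetical in-memory registry; real registries persist artifacts and metadata."""

    def __init__(self) -> None:
        self._models: Dict[str, List[ModelVersion]] = {}

    def register(self, name: str, predict: Callable[[Any], Any]) -> ModelVersion:
        versions = self._models.setdefault(name, [])
        model = ModelVersion(name=name, version=len(versions) + 1, predict=predict)
        versions.append(model)
        return model

    def promote(self, name: str, version: int) -> None:
        versions = self._models.get(name, [])
        if not 1 <= version <= len(versions):
            raise ValueError(f"{name!r} has no version {version}")
        # Keep exactly one production version per model; archive the previous one.
        for model in versions:
            if model.stage == "production":
                model.stage = "archived"
        versions[version - 1].stage = "production"

    def production(self, name: str) -> ModelVersion:
        for model in self._models.get(name, []):
            if model.stage == "production":
                return model
        raise LookupError(f"no production version of {name!r}")

    def serve(self, name: str, features: Any) -> Any:
        # Route each request to whichever version is currently in production.
        return self.production(name).predict(features)


if __name__ == "__main__":
    registry = ModelRegistry()
    registry.register("churn", lambda f: 0)  # v1
    registry.register("churn", lambda f: 1)  # v2
    registry.promote("churn", 2)
    registry.register("fraud", lambda amount: amount > 100)
    registry.promote("fraud", 1)
    print(registry.serve("churn", {}), registry.serve("fraud", 250))
```

Because callers ask for a model by name rather than by version, promoting or rolling back a version does not require changes in the consuming applications.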

Feature Engineering

Feature Engineering is a key step in any Machine Learning lifecycle. Moreover, maintaining feature consistency between the training and serving layers is a key requirement (and challenge). Emerging technologies like Feature Stores help ML teams achieve this.
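The core idea behind training/serving consistency is that feature logic is defined once and reused on both paths. The sketch below illustrates only that idea with plain Python; it is not a Feature Store, and the field names (`amount`, `transactions_last_30d`, `is_weekend`) are hypothetical.

```python
import math
from typing import Dict, List, Mapping

FEATURE_NAMES = ["log_amount", "avg_daily_txn", "is_weekend"]


def compute_features(raw: Mapping[str, object]) -> List[float]:
    """Single definition of the feature logic, shared by training and serving."""
    amount = float(raw.get("amount", 0.0))
    txn_30d = float(raw.get("transactions_last_30d", 0.0))
    return [
        math.log1p(max(amount, 0.0)),            # log-scale, clipped at zero
        txn_30d / 30.0,                          # average transactions per day
        1.0 if raw.get("is_weekend") else 0.0,   # binary flag
    ]


def build_training_matrix(records: List[Mapping[str, object]]) -> List[List[float]]:
    # Offline path: a batch of historical records.
    return [compute_features(r) for r in records]


def features_for_request(request: Mapping[str, object]) -> Dict[str, float]:
    # Online path: one live request, same function, so no training/serving skew.
    return dict(zip(FEATURE_NAMES, compute_features(request)))
```

A Feature Store generalizes this by storing the computed features themselves, so the offline and online paths read the same values rather than merely calling the same code.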

Model Development

This is the central piece of the ML lifecycle. Although it is a small part of the overall effort, it is key, since it is the layer that adds intelligence to the Data Science application. It needs a platform that supports various ML frameworks and architectures. Alongside, the platform needs to ensure reproducibility and support Hyperparameter tuning, among other things.
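Reproducibility and Hyperparameter tuning go hand in hand: a search is only comparable across runs if every source of randomness is pinned. The minimal sketch below assumes a toy setup (synthetic data and a one-parameter linear model fit with SGD, standing in for a real training job) and runs a seeded grid search whose result is identical on every run. All function names and the grid values are hypothetical.

```python
import itertools
import random
from typing import Dict, List, Sequence, Tuple

Dataset = List[Tuple[float, float]]


def make_data(seed: int, n: int = 200) -> Dataset:
    """Synthetic data y = 3x + noise, generated from a fixed seed."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        data.append((x, 3.0 * x + rng.gauss(0.0, 0.1)))
    return data


def train_and_score(data: Dataset, lr: float, epochs: int, seed: int) -> float:
    """Fit y = w*x with SGD and return the training MSE."""
    rng = random.Random(seed)  # seeded shuffling keeps runs reproducible
    rows = list(data)
    w = 0.0
    for _ in range(epochs):
        rng.shuffle(rows)
        for x, y in rows:
            w -= lr * 2.0 * (w * x - y) * x  # gradient of (w*x - y)^2 w.r.t. w
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def grid_search(grid: Dict[str, Sequence], seed: int = 42) -> Tuple[Dict[str, object], float]:
    """Try every hyperparameter combination and return the lowest-MSE one."""
    data = make_data(seed)
    keys = sorted(grid)
    results = []
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = train_and_score(data, params["lr"], params["epochs"], seed)
        results.append((score, params))
    best_score, best_params = min(results, key=lambda r: r[0])
    return best_params, best_score


if __name__ == "__main__":
    grid = {"lr": [0.001, 0.01, 0.1], "epochs": [1, 5]}
    print(grid_search(grid))  # same seed and grid give the same answer every time
```

Grid search is used here only because it is the simplest tuning strategy to show; platforms typically also offer random or Bayesian search.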

Elements of a Machine Learning Infrastructure

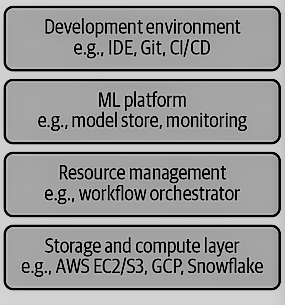

Now, having discussed the functional requirements of a Machine Learning Infrastructure, let’s go through its technical requirements/elements. The below image summarizes the same:

The base layer of any Machine Learning Infrastructure is the Storage and Compute layer. It covers the first two functional requirements, viz. Data and Compute. In Microsoft Azure, storage options include Azure Storage Accounts and Azure Data Lake Storage. For compute, there are Azure Machine Learning compute options such as Compute Instances and Compute Clusters. Not to forget Azure Databricks!

However, to use this layer effectively, Data Scientists and Machine Learning Engineers need a secure and robust Development Environment. This calls for setting up IDEs and code repositories, which enable Data Scientists to carry out Model Development, Feature Engineering and Versioning (of code). In Microsoft Azure, the development options again include Azure Databricks, Azure ML Compute Instances and VS Code. On top of that, Azure DevOps covers versioning and CI/CD.

Furthermore, Data Scientists run a large number of experiments. To do so, they need specialized environments for Experiment Tracking, Model Management and Monitoring. Thus, we need a versatile ML Platform that can take care of Model Operations, System Design and Software Architecture. Both Azure Machine Learning and Azure Databricks are phenomenal ML Platforms for this.

Lastly, Resource Management is key to binding the above together. In this context it is essentially Orchestration, and on Azure this is where Azure Pipelines come in.

Conclusion

No infrastructure can be generic enough to cover every Machine Learning use case, especially specialized ones like self-driving cars. However, a team can build one that is generic enough to support most AI/ML-driven use cases. These are some first principles of an ML infrastructure and are by no means exhaustive.

Featured Image Credit: Wikipedia.