The technology landscape keeps changing, and new tools keep emerging. Azure Databricks has long been a prominent option for end-to-end analytics in the Microsoft Azure stack. In 2019, Microsoft introduced Azure Synapse, and with it came Azure Synapse Analytics. We wrote about the philosophy behind Synapse back then in our article Azure Synapse Analytics: Azure SQL Data Warehouse revamped. So what nuances does one need to take care of when migrating from Azure Databricks to Azure Synapse? We covered a related migration, from Azure Data Factory to Azure Synapse Integration, here: Migrating from Azure Data Factory to Azure Synapse Integration.

First, this is not a comparison of Azure Databricks and Azure Synapse Analytics. In fact, we do not touch on performance or cost at all. We assume that you have already decided to migrate from Azure Databricks to Azure Synapse Analytics and that there is no turning back. So what changes do you need to make to your Spark code?

Note that Azure Synapse is built on top of open-source Spark, whereas Databricks has built some additional modules on top of it. As a result, three important Databricks features need an alternative in Synapse:

1. Replacing the Azure Key Vault-backed Databricks secret scope.

Writing secure code is a key skill for any developer. Sensitive information such as passwords must never be exposed in code. Azure Key Vault is a Microsoft Azure service that stores sensitive data in the form of secrets. To access these secrets, Azure Databricks has a feature called the Azure Key Vault-backed secret scope. To learn how to set it up for your Azure Databricks workspace, read this tutorial by Microsoft.

Synapse does not have the concept of secret scopes. So how do we access secrets? It turns out there is a way, using a module named TokenLibrary. The following steps are required:

- Create a linked service to the Azure Key Vault in Azure Synapse Analytics.

- Like other Azure services, the Synapse workspace has a managed identity. Grant it the GET permission on the Key Vault.

- Use the getSecret() method to access the required Key Vault secret.

The following is a reference code snippet:

from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()

token_library = spark._jvm.com.microsoft.azure.synapse.tokenlibrary.TokenLibrary

connection_string = token_library.getSecret("<AZURE KEY VAULT NAME>", "<SECRET KEY>", "<LINKED SERVICE NAME>")

print(connection_string)  # for demonstration only; avoid printing secrets in real code

Reference article here.
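If existing Databricks code calls dbutils.secrets.get(scope, key) in many places, one possible way to limit the changes is a small shim that keeps that call shape and forwards to getSecret(). The following is only a sketch under assumptions: the class name SecretScopeShim, the scope-to-vault mapping, and the names kv-prod, ls-kv-prod and db-password are hypothetical, and a stand-in fetcher replaces TokenLibrary so the example runs outside Synapse.

```python
class SecretScopeShim:
    """Hypothetical helper that mimics the dbutils.secrets.get(scope, key) call shape."""

    def __init__(self, fetch_secret, scope_map):
        # fetch_secret: callable (vault_name, secret_name, linked_service) -> str
        # scope_map: {old Databricks scope name: (vault_name, linked_service)}
        self._fetch_secret = fetch_secret
        self._scope_map = scope_map

    def get(self, scope, key):
        if scope not in self._scope_map:
            raise KeyError(f"No Key Vault mapping configured for scope '{scope}'")
        vault_name, linked_service = self._scope_map[scope]
        return self._fetch_secret(vault_name, key, linked_service)


# On a Synapse Spark pool, fetch_secret would wrap TokenLibrary, for example:
#   fetch_secret = lambda vault, name, ls: token_library.getSecret(vault, name, ls)
# A stand-in fetcher is used here so the sketch runs anywhere.
def fake_fetch(vault_name, secret_name, linked_service):
    return f"secret-from-{vault_name}/{secret_name}-via-{linked_service}"


secrets = SecretScopeShim(fake_fetch, {"prod-scope": ("kv-prod", "ls-kv-prod")})
value = secrets.get("prod-scope", "db-password")
print(len(value) > 0)  # confirm the lookup worked without echoing the secret
```

With such a shim, the scope names used in the old notebooks stay the same and only the mapping has to know about vaults and linked services.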

2. Replacing dbutils in Azure Synapse Analytics.

As mentioned above, Databricks has added certain flavours on top of open-source Spark. One very useful feature that Databricks has built is dbutils, also called Databricks Utilities. It comprises functions to manage file systems, notebooks, secrets, etc. It is especially useful for file system tasks such as copy and remove. To know more, refer to this link.

Azure Synapse, on the other hand, has its own counterpart to dbutils called Microsoft Spark Utilities. To dive deep into it, refer to this link.
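One way to ease the switch is to write file system helpers against the calls that both utilities share (ls, mkdirs and cp) and pass the file system object in as a parameter. The sketch below assumes that the entries returned by ls expose name and path attributes in both utilities. The helper copy_matching, the FakeFs stand-in and the paths are hypothetical and exist only so the example runs outside a Spark pool. On Synapse you would pass mssparkutils.fs (after from notebookutils import mssparkutils), and on Databricks dbutils.fs.

```python
from collections import namedtuple

FileInfo = namedtuple("FileInfo", "path name size")


class FakeFs:
    """In-memory stand-in exposing only ls, mkdirs and cp."""

    def __init__(self, files):
        self.files = dict(files)  # full path -> file content

    def ls(self, directory):
        prefix = directory.rstrip("/") + "/"
        return [
            FileInfo(path, path[len(prefix):], len(content))
            for path, content in sorted(self.files.items())
            if path.startswith(prefix) and "/" not in path[len(prefix):]
        ]

    def mkdirs(self, directory):
        return True

    def cp(self, source, destination):
        self.files[destination] = self.files[source]
        return True


def copy_matching(fs, src_dir, dst_dir, suffix):
    """Copy every file in src_dir whose name ends with suffix into dst_dir."""
    fs.mkdirs(dst_dir)
    copied = []
    for info in fs.ls(src_dir):
        if info.name.endswith(suffix):
            target = dst_dir.rstrip("/") + "/" + info.name
            fs.cp(info.path, target)
            copied.append(target)
    return copied


fs = FakeFs({"/raw/a.csv": "1,2", "/raw/b.json": "{}", "/raw/c.csv": "3,4"})
copied = copy_matching(fs, "/raw", "/staged", ".csv")
print(copied)
```

Because the helper never names dbutils or mssparkutils itself, the same function can be reused on either platform during the migration.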

3. No Databricks magic commands available in Azure Synapse Analytics.

Finally, code reusability is one of the most important software engineering principles. Imagine that you want to re-use some commonly occurring functions across different notebooks. In Databricks, this can be achieved easily using magic commands like %run. Alternatively, you can use dbutils to run notebooks. This makes it easy to create notebook workflows.

Unfortunately, Azure Synapse Analytics does not have the same feature. This creates code redundancy across notebooks. Hopefully, Microsoft will add this feature in the future.

Update: The %run feature exists in Synapse notebooks (although in preview). Thanks to one of our readers for pointing it out. Here is the link: Magic commands.
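Besides %run, Microsoft Spark Utilities can also run notebooks, through mssparkutils.notebook.run, which takes a notebook path, a timeout in seconds and a map of arguments, much like dbutils.notebook.run. Note that, unlike %run, this executes the notebook and returns its exit value rather than bringing its functions into the calling notebook. The sketch below chains several notebooks. The helper run_notebooks, the notebook paths, the run_date parameter and the stand-in runner are all hypothetical, chosen so the example runs outside Synapse.

```python
from types import SimpleNamespace


def run_notebooks(notebook_utils, paths, timeout_seconds=90, params=None):
    """Run notebooks one after another and collect each exit value by path."""
    results = {}
    for path in paths:
        # Same positional call shape as dbutils.notebook.run and
        # mssparkutils.notebook.run: (path, timeout in seconds, arguments).
        results[path] = notebook_utils.run(path, timeout_seconds, dict(params or {}))
    return results


# Stand-in so the sketch runs outside Synapse; on a Spark pool you would pass
# mssparkutils.notebook (from notebookutils import mssparkutils) instead.
def fake_run(path, timeout_seconds, arguments):
    return f"{path} finished with run_date={arguments.get('run_date')}"


fake_notebook_utils = SimpleNamespace(run=fake_run)
results = run_notebooks(
    fake_notebook_utils,
    ["/shared/common_functions", "/etl/load_sales"],
    timeout_seconds=120,
    params={"run_date": "2021-01-01"},
)
for path, exit_value in results.items():
    print(path, "->", exit_value)
```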

Conclusion

We hope you find this article useful. We do not guarantee its completeness or accuracy, and the article will evolve since both platforms are evolving rapidly.

For something written so recently, odd to say Synapse doesn’t have the %run feature

We have edited the same now. Thanks for bringing this up.

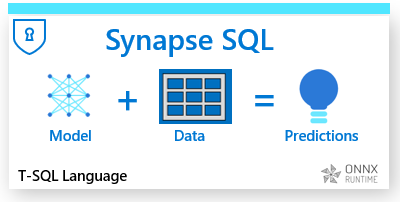

Microsoft Spark Utilities could be used to run notebooks. With this, you don’t have to create the same code in different notebooks in Azure Synapse Analytics.

Yes. We made that modification.

Any luck replacing Auto Loader?