Why Data and Model Validation?

A Machine Learning application is, at the end of the day, a piece of software. Hence, the maxims of software engineering also apply to Machine Learning Engineering. In the Software Development Life Cycle (SDLC), validation plays a key role: it increases the reliability and reproducibility of software, which in turn improves operational efficiency. As I said in one of my earlier posts, a Machine Learning training pipeline is like an ETL pipeline. The authors of the book Reliable Machine Learning reiterate this thought: they describe the ML training pipeline as a special-purpose ETL pipeline that takes unprocessed data, applies ML algorithms to it, and produces models as output. Hence, all the steps needed to validate ETL pipelines apply to ML pipelines too. In fact, ML pipelines require more validation than ETL pipelines, because their output is a model whose quality must also be checked. This brings us to Data and Model Validation.

Data Validation

A Machine Learning system follows the rule of Garbage In, Garbage Out. Hence, it is imperative for ML Engineers and Data Scientists to validate the data they feed into the ML system. Moreover, Data Validation should ideally be part of the training pipeline itself. So what does Data Validation involve?

The first step is to validate the schema of the input data. For the model quality to be optimal, all the required features need to be present in the training data. Hence, Data Scientists and ML Engineers should store and document all the features required for training. Here is a simple example with a pandas DataFrame (`dataframe` is assumed to hold the training data):

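```python
# Required feature names; extend with the rest of your documented features (...)
select_columns = ['A', 'B', 'C']
# True only if every required column is present in the DataFrame
column_flag = all(col in dataframe.columns for col in select_columns)
```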
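The column-presence check can be extended to a documented schema that also records the expected dtype of each feature. The sketch below assumes such a schema is kept as a plain dictionary; `EXPECTED_SCHEMA`, its column names and the `validate_schema` helper are hypothetical, not part of pandas.

```python
import pandas as pd

# Hypothetical documented schema: required feature name -> expected pandas dtype
EXPECTED_SCHEMA = {"age": "int64", "income": "float64", "tenure_months": "int64"}


def validate_schema(df: pd.DataFrame, schema: dict) -> list:
    """Return a list of human-readable schema violations (empty if none)."""
    errors = []
    for column, expected_dtype in schema.items():
        if column not in df.columns:
            errors.append(f"missing column: {column}")
        elif str(df[column].dtype) != expected_dtype:
            errors.append(f"{column}: expected {expected_dtype}, got {df[column].dtype}")
    return errors


df = pd.DataFrame({"age": [25, 40], "income": [52000.0, 61000.0]})
print(validate_schema(df, EXPECTED_SCHEMA))  # ['missing column: tenure_months']
```

Returning a list of violations instead of a single boolean makes it easier to log exactly what broke before failing the pipeline step.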

Next comes missing-value validation. Missing values and unclean data can significantly degrade ML model training. Hence, it is good practice to check for missing values at the very beginning of the pipeline.

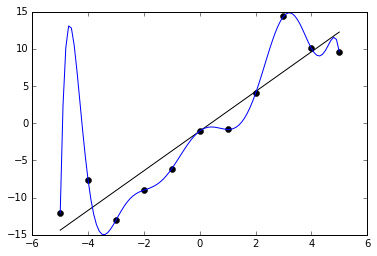
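A missing-value check can be written in a few lines of pandas. This is a minimal sketch; the `missing_value_report` name and the `max_fraction` threshold are assumptions (some pipelines tolerate a small share of missing values and impute them later).

```python
import pandas as pd


def missing_value_report(df: pd.DataFrame, max_fraction: float = 0.0) -> pd.Series:
    """Return the fraction of missing values for each column that exceeds max_fraction."""
    fractions = df.isna().mean()  # per-column share of NaN/None values
    return fractions[fractions > max_fraction]


df = pd.DataFrame({"A": [1.0, None, 3.0, 4.0], "B": ["x", "y", "z", "w"]})
report = missing_value_report(df)
print(report.to_dict())  # {'A': 0.25}
```

In a training pipeline, a non-empty report could be logged and used to fail the step before any training starts.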

Another aspect of Data Validation is data quality. For instance, date-time columns should adhere to the expected format. Anomaly detection is another example: values far outside the expected range often point to data errors.
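Both quality checks can be sketched with pandas. The sketch assumes dates are expected in `%Y-%m-%d` format and uses Tukey's interquartile-range (IQR) fences as a simple stand-in for anomaly detection; real pipelines may need a different format or a more sophisticated detector. The helper names are hypothetical.

```python
import pandas as pd


def invalid_datetimes(series: pd.Series, fmt: str = "%Y-%m-%d") -> pd.Series:
    """Return the non-null entries that do not match the expected date-time format."""
    parsed = pd.to_datetime(series, format=fmt, errors="coerce")  # bad values -> NaT
    return series[parsed.isna() & series.notna()]


def iqr_outliers(series: pd.Series, k: float = 1.5) -> pd.Series:
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, q3 = series.quantile(0.25), series.quantile(0.75)
    iqr = q3 - q1
    return series[(series < q1 - k * iqr) | (series > q3 + k * iqr)]


dates = pd.Series(["2023-01-15", "15/01/2023", "2023-02-30", None])
print(invalid_datetimes(dates).tolist())  # ['15/01/2023', '2023-02-30']

amounts = pd.Series([10, 12, 11, 13, 12, 500])
print(iqr_outliers(amounts).tolist())  # [500]
```

Note that `errors="coerce"` also catches syntactically valid but impossible dates such as `2023-02-30`.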

There are some interesting and useful packages for data validation, such as Great Expectations and TensorFlow Data Validation (TFDV).

Model Validation

Along with input validation (i.e., Data Validation), an ML Engineer should build output validation into the training pipeline. In this context, it is called Model Validation. But why Model Validation?

In Machine Learning system design, there are two workflows: the training workflow and the deployment workflow. A training pipeline usually ends by registering the model in a model registry, and the deployment workflow picks up the model from that registry. Before deployment, however, the team should be confident in the quality of the model they are about to deploy to the target environment. That's where Model Validation comes in.
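One way to make that confidence explicit is a validation gate between training and registration. The sketch below is hypothetical: it assumes metrics are plain dictionaries where higher is better, and that the team defines absolute floors plus an optional comparison against the currently deployed baseline.

```python
from typing import Dict


def passes_validation_gate(
    candidate: Dict[str, float],
    baseline: Dict[str, float],
    min_scores: Dict[str, float],
    tolerance: float = 0.0,
) -> bool:
    """Return True if the candidate meets every absolute floor and does not
    fall more than `tolerance` below the deployed baseline on any metric.
    Assumes higher is better for every metric."""
    for metric, floor in min_scores.items():
        if candidate.get(metric, float("-inf")) < floor:
            return False  # fails an absolute quality bar
    for metric, base_value in baseline.items():
        if candidate.get(metric, float("-inf")) < base_value - tolerance:
            return False  # regresses against the deployed model
    return True


print(passes_validation_gate({"accuracy": 0.91, "f1": 0.88},
                             {"accuracy": 0.90, "f1": 0.87},
                             {"accuracy": 0.85}))  # True
```

A missing metric is treated as negative infinity, so a candidate that was never evaluated on a required metric cannot pass by accident.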

Model Validation entails basic sanity testing of predictions, performance evaluation on the test and validation sets, fairness evaluation, interpretability, and so on. For instance, a basic sanity test could be performed in Azure ML as follows:

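```python
import joblib
from azureml.core import Workspace, Model

ws = Workspace.from_config()  # assumes a config.json describing your workspace
model_obj = Model(ws, registered_model)  # load a registered model by name
model_path = model_obj.download(exist_ok=True)  # download the model file locally
model = joblib.load(model_path)
# data_df is a pandas DataFrame loaded from a sample file
categories = model.predict(data_df).tolist()
```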
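Beyond simply calling `predict`, a sanity test can assert basic properties of the returned predictions. The helper below is a hypothetical sketch: it assumes a classifier whose predictions come back as a list (like `categories`) and a known set of allowed labels. The single-class check is a heuristic for a degenerate model, not a hard rule.

```python
from typing import Iterable, List, Sequence


def sanity_check_predictions(
    predictions: Sequence, n_rows: int, allowed_labels: Iterable
) -> List[str]:
    """Return a list of problems found in a batch of predictions (empty if none)."""
    problems = []
    if len(predictions) != n_rows:
        problems.append(f"expected {n_rows} predictions, got {len(predictions)}")
    allowed = set(allowed_labels)
    unexpected = sorted({str(p) for p in predictions if p not in allowed})
    if unexpected:
        problems.append(f"unexpected labels: {unexpected}")
    if len(predictions) > 1 and len(set(predictions)) == 1:
        problems.append("model predicts a single class for every row")
    return problems


print(sanity_check_predictions(["spam", "ham", "spam"], 3, {"spam", "ham"}))  # []
```

In the Azure ML example, this could be called as `sanity_check_predictions(categories, len(data_df), allowed_labels)`, where `allowed_labels` is a placeholder for your model's label set.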

However, more advanced evaluation paradigms, such as fairness evaluation and interpretability, can build more confidence among the consumers of the model.
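As one illustration of fairness evaluation, the demographic parity difference compares how often a model predicts the positive class for different groups. The sketch below hand-rolls this metric for binary predictions; the function names and the group labels are hypothetical, and libraries such as Fairlearn provide maintained implementations of similar metrics.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(y_pred: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Share of positive (1) predictions within each group."""
    positives, totals = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(y_pred, groups).values()
    return max(rates) - min(rates)


y_pred = [1, 0, 1, 1, 0, 0]
groups = ["a", "a", "a", "b", "b", "b"]
print({g: round(r, 2) for g, r in selection_rates(y_pred, groups).items()})  # {'a': 0.67, 'b': 0.33}
```

A large gap does not prove the model is unfair on its own, but it is a signal worth investigating before the model is deployed.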

Conclusion

MLOps is an emerging field, with new practices being proposed every day. Hence, the tactics may change, but the strategy remains the same: more validation leads to greater trust and reliability. Lastly, this article is for information purposes only. We do not claim any guarantees regarding its accuracy and completeness.

Note: The feature image has been borrowed from Wikipedia.