Prologue

In the first part of this series, Move Files with Azure Data Factory - Part I, we walked through the approach to move a single file from one blob location to another using Azure Data Factory. Along the way, we learnt about two key activities of Azure Data Factory: the Copy activity and the Delete activity.

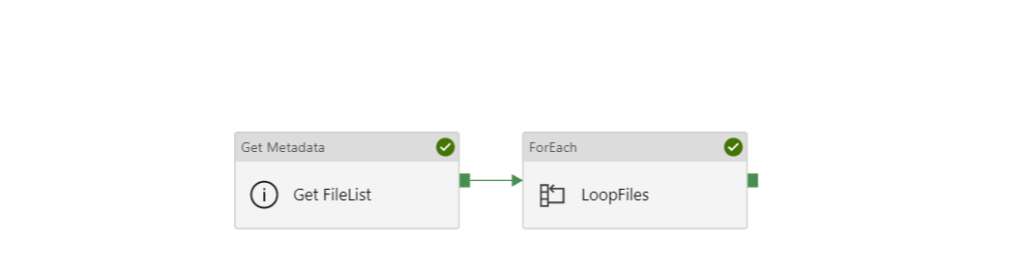

However, real-life scenarios are rarely that simple. Generally, a folder holds multiple files, which need to be processed and archived sequentially. To achieve that, we will introduce two new activities: the Get Metadata activity and the ForEach activity.

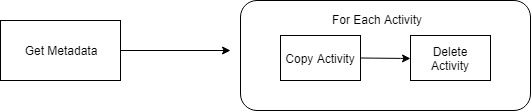

Pipeline overview

To move files in Azure Data Factory, we start with the Copy activity and the Delete activity. However, when we have multiple files in a folder, we need a looping container. Fortunately, ADF has a ForEach activity, similar to the Foreach Loop container in SSIS, to provide the looping function.

However, the ForEach loop requires a list of objects (called items) to loop over. These items are provided by a Get Metadata activity. Here is a high-level block diagram of the pipeline we are going to build.

Get Metadata

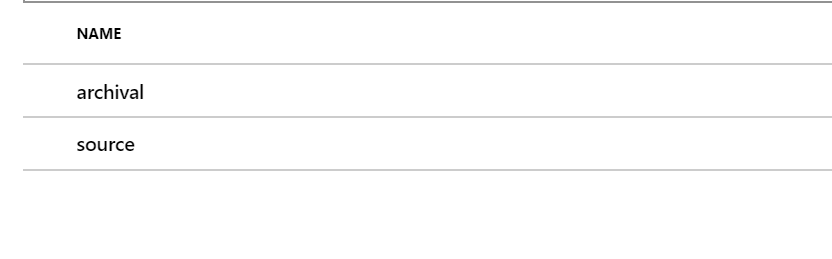

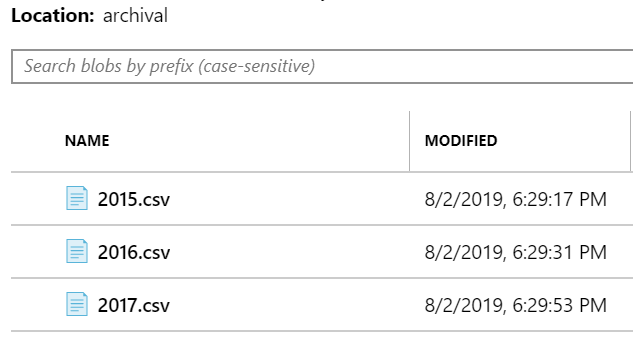

Let us set up the scenario first. We have two blob containers: source and archival.

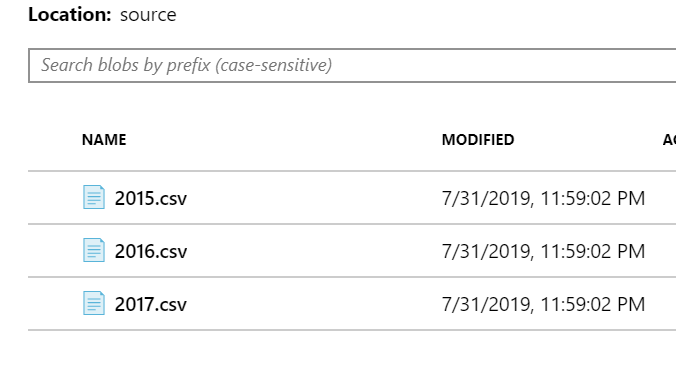

The source contains three files as shown below.

As mentioned in the pipeline overview, we will use the Get Metadata activity to retrieve the metadata of the source folder. A part of this metadata will then act as the list of objects, called ‘items’, for the ForEach container.

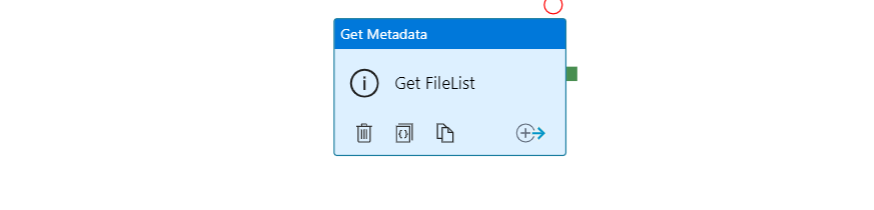

In a new pipeline named Move Files, we have a new Get Metadata activity named ‘Get FileList’.

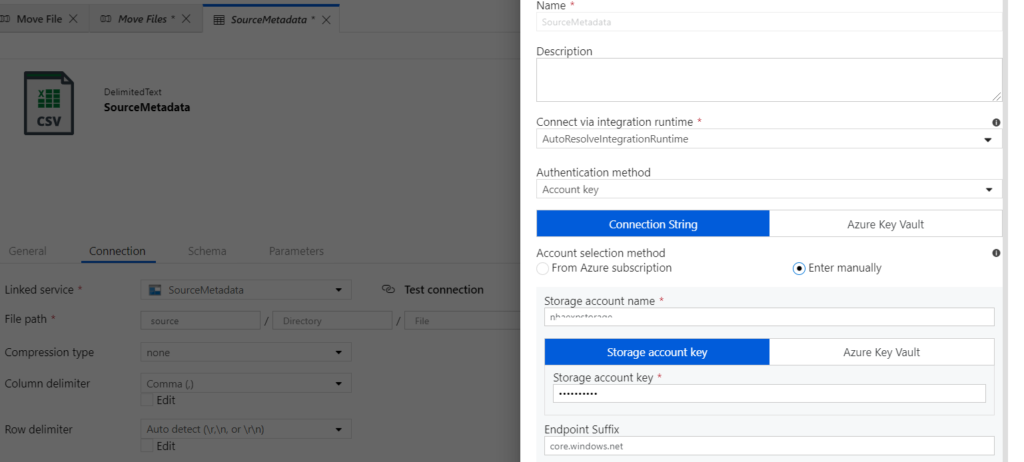

For this activity, we have created an independent connection named SourceMetadata pointing to the source folder, which contains all the files to be moved.

ChildItems

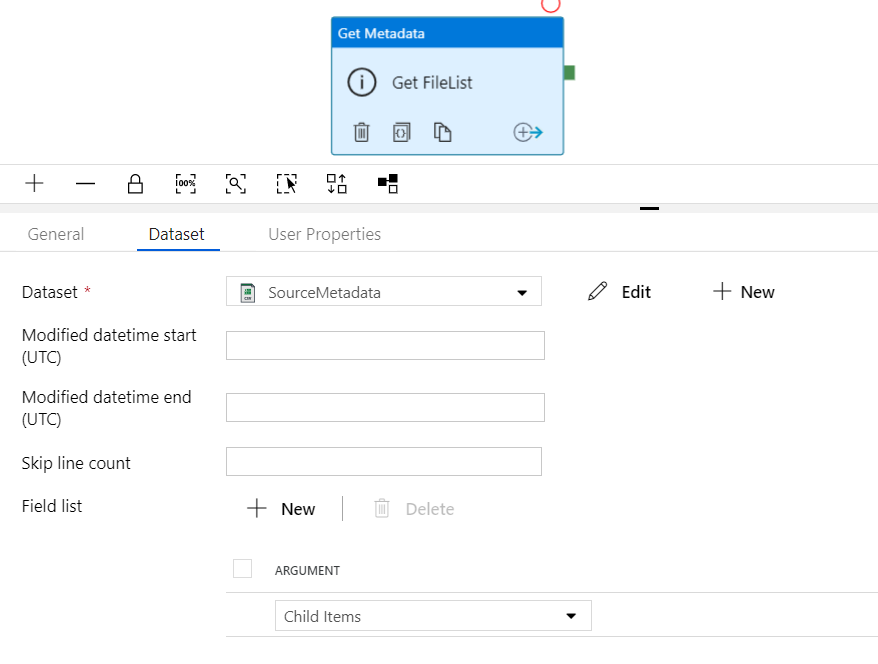

Once the connection is established and tested successfully, we need to configure the output of the Get Metadata activity. This is done with the Field list option in the Dataset. Click on New and select Child Items. This setting ensures that the output includes the child items of the blob container, i.e. the file list.

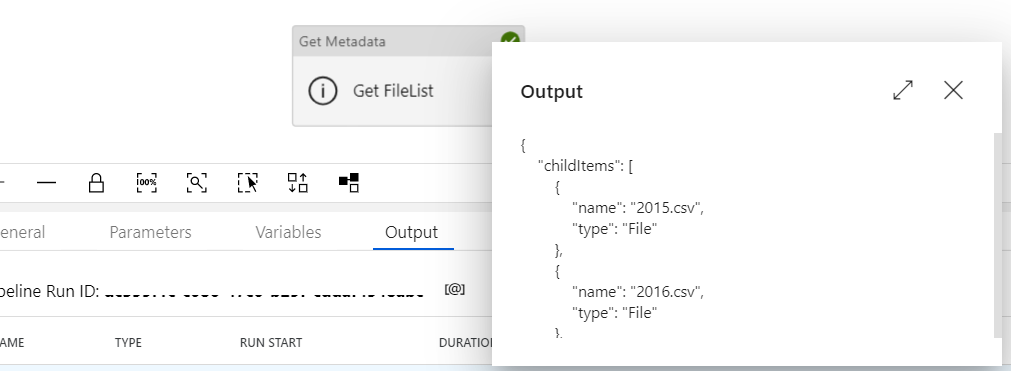
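For readers who prefer the code view, the configuration described above corresponds roughly to the activity JSON below. This is a minimal sketch rather than an export of the actual pipeline: the activity and dataset names come from this article, while any further properties that the designer may generate (such as store or format settings) are left out.

```json
{
    "name": "Get FileList",
    "type": "GetMetadata",
    "typeProperties": {
        "dataset": {
            "referenceName": "SourceMetadata",
            "type": "DatasetReference"
        },
        "fieldList": [
            "childItems"
        ]
    }
}
```

The `fieldList` array is where the Child Items selection ends up; other metadata fields could be listed alongside it if needed.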

Let us run this pipeline and visualize the output of Get Metadata activity. We can see childItems in the output.
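To give an idea of what to look for, the relevant part of the activity output has roughly the shape shown below. The file names are hypothetical placeholders, since the actual names appear only in the screenshots, and the real output carries additional run information that is trimmed here.

```json
{
    "childItems": [
        { "name": "file1.csv", "type": "File" },
        { "name": "file2.csv", "type": "File" },
        { "name": "file3.csv", "type": "File" }
    ]
}
```

Each element has a `name` and a `type`. If the folder contained subfolders, they would show up with the type `Folder`, which is worth keeping in mind when the loop is meant to handle files only.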

ForEach activity

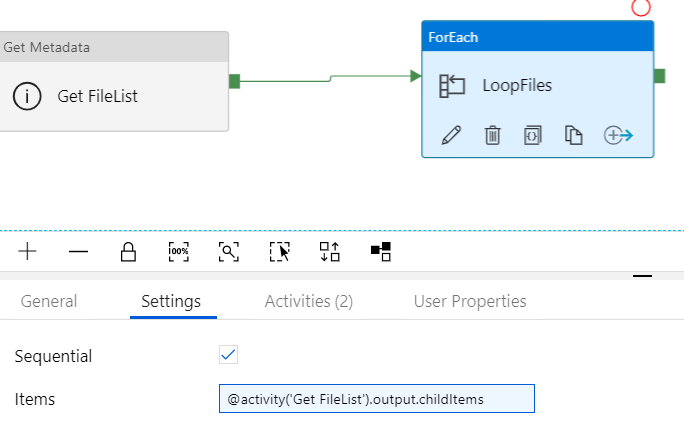

Now comes the heart of this article, i.e. the ForEach activity. As discussed earlier, a ForEach activity requires a list called ‘Items’ to loop through. These items are supplied by the preceding Get Metadata activity. The configuration is depicted below.

Please note that you have to check the Sequential check-box to make the loop process the files one at a time. Otherwise, the iterations run in parallel. We then use the output of the Get Metadata activity (Get FileList) as the input to the ForEach loop. Here is the expression for your reference.

@activity('Get FileList').output.childItems

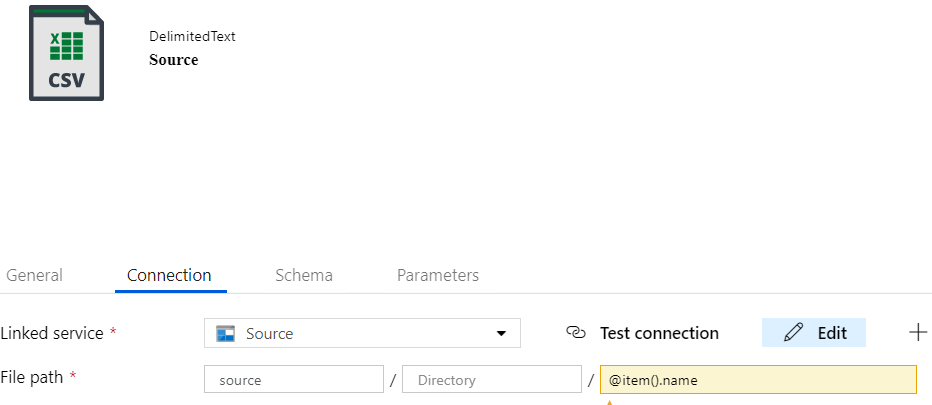

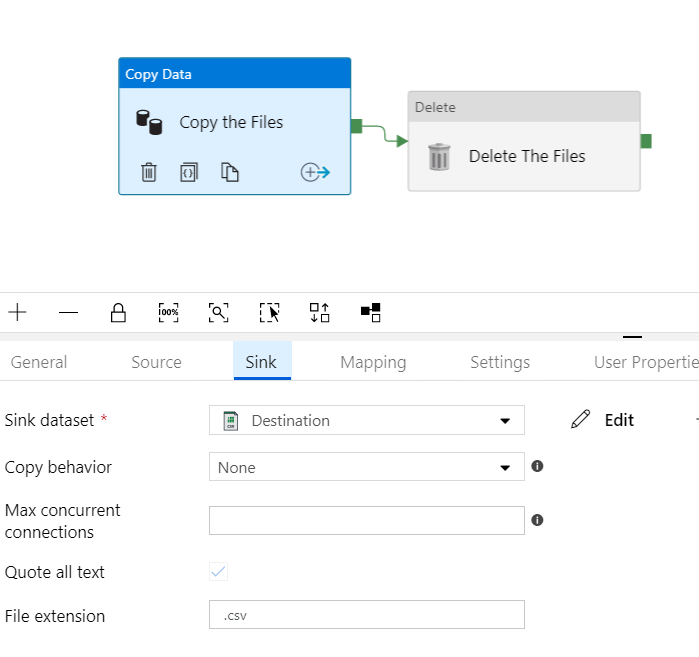
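In the code view, the ForEach settings discussed above look roughly like the sketch below. The activity name ‘ForEach File’ is a hypothetical placeholder, and the `activities` array is left empty here because the inner Copy and Delete activities are configured separately.

```json
{
    "name": "ForEach File",
    "type": "ForEach",
    "dependsOn": [
        {
            "activity": "Get FileList",
            "dependencyConditions": [
                "Succeeded"
            ]
        }
    ],
    "typeProperties": {
        "isSequential": true,
        "items": {
            "value": "@activity('Get FileList').output.childItems",
            "type": "Expression"
        },
        "activities": []
    }
}
```

The Sequential check-box maps to `isSequential`, and the `dependsOn` entry ensures that the loop starts only after Get FileList has succeeded.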

Furthermore, the activities within the ForEach need configuration as well. Double-clicking the ForEach container takes you to the inner activities. Here, we find the Copy activity and the Delete activity that move the files. However, the filenames cannot be static, as they were in the first part of this series. The snapshot below depicts the dynamic filenames.

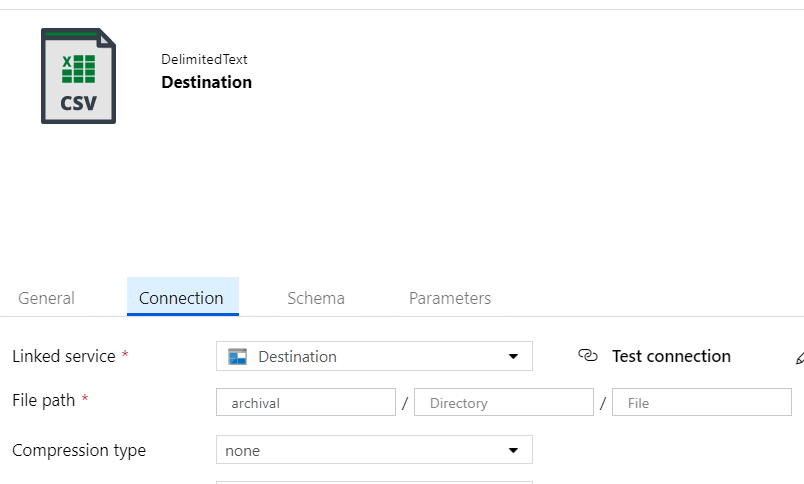
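One common way to make the filename dynamic is to parameterise the source dataset and pass the current loop item into it. The sketch below assumes this approach; the dataset name `SourceFile`, the linked service name `AzureBlobStorage1`, the parameter name `FileName` and the delimited-text format are all hypothetical and may differ from what the screenshots show.

```json
{
    "name": "SourceFile",
    "properties": {
        "linkedServiceName": {
            "referenceName": "AzureBlobStorage1",
            "type": "LinkedServiceReference"
        },
        "parameters": {
            "FileName": {
                "type": "string"
            }
        },
        "type": "DelimitedText",
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "fileName": {
                    "value": "@dataset().FileName",
                    "type": "Expression"
                },
                "container": "source"
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": true
        }
    }
}
```

Inside the loop, an activity would then supply `@item().name` as the value of `FileName`, so that each iteration points the dataset at one file from the childItems list.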

The destination connection, in turn, needs two settings. Firstly, set the file extension to .csv (matching the source files). Secondly, keep the filename blank in the File Path, so that each file keeps its original name in the archival container.

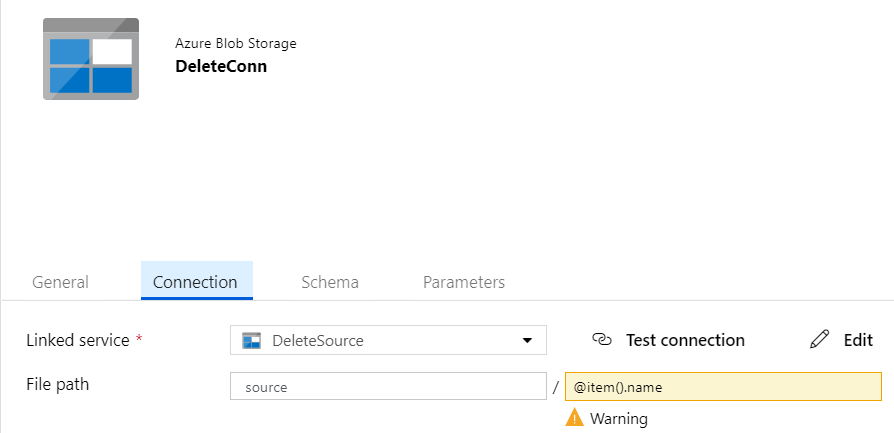
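Putting the source and destination settings together, the Copy activity inside the loop could look like the sketch below. It assumes delimited-text datasets on Azure Blob Storage, a hypothetical parameterised source dataset `SourceFile` with a `FileName` parameter, and a hypothetical destination dataset `ArchivalFolder` that points to the archival container without a file name. The activity name ‘Copy File’ is a placeholder as well.

```json
{
    "name": "Copy File",
    "type": "Copy",
    "typeProperties": {
        "source": {
            "type": "DelimitedTextSource",
            "storeSettings": {
                "type": "AzureBlobStorageReadSettings",
                "recursive": false
            }
        },
        "sink": {
            "type": "DelimitedTextSink",
            "storeSettings": {
                "type": "AzureBlobStorageWriteSettings"
            },
            "formatSettings": {
                "type": "DelimitedTextWriteSettings",
                "fileExtension": ".csv"
            }
        }
    },
    "inputs": [
        {
            "referenceName": "SourceFile",
            "type": "DatasetReference",
            "parameters": {
                "FileName": {
                    "value": "@item().name",
                    "type": "Expression"
                }
            }
        }
    ],
    "outputs": [
        {
            "referenceName": "ArchivalFolder",
            "type": "DatasetReference"
        }
    ]
}
```

Here the file extension setting appears as `fileExtension` under the sink's format settings, while the blank filename is a property of the destination dataset rather than of the activity itself.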

Lastly, configure the Delete activity in the same way as the source connection, i.e. with dynamic filenames, so that it removes only the file that has just been copied.
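A Delete activity configured this way might look like the following sketch. As before, the names `Delete Source File`, `Copy File`, `SourceFile` and `FileName` are hypothetical, and logging is switched off only to keep the example short.

```json
{
    "name": "Delete Source File",
    "type": "Delete",
    "dependsOn": [
        {
            "activity": "Copy File",
            "dependencyConditions": [
                "Succeeded"
            ]
        }
    ],
    "typeProperties": {
        "dataset": {
            "referenceName": "SourceFile",
            "type": "DatasetReference",
            "parameters": {
                "FileName": {
                    "value": "@item().name",
                    "type": "Expression"
                }
            }
        },
        "enableLogging": false
    }
}
```

Making the Delete depend on the success of the Copy is a deliberate choice in this sketch: if the copy fails, the source file stays in place instead of being lost.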

Run the pipeline and see the results.

Source Before Move

Archival Before Move

Source After Move

Archival After Move

Conclusion

This isn’t an exhaustive scenario. For instance, the source container could be empty, in which case we would need to send an email notification. We will explore that in the next article.
