Why Move Files?

A typical ETL process extracts data, transforms it, and loads it into a destination. First, we extract files into a container or folder. Next, we process these files to apply the transformations. Then, we load the transformed data into the destination. Finally, we move the extracted files from the source container or folder to a separate location reserved for archival.

In Microsoft SSIS, this was straightforward with the File System task. However, as the data landscape changed, newer tools and technologies emerged, and on-premises systems started moving to the cloud. In response, Microsoft introduced a cloud ETL offering named Azure Data Factory. ETL concepts and practices, however, have not changed and will be around for a long time; archiving files is no exception.

Unfortunately, Azure Data Factory has no pre-built equivalent of the File System task. Let us walk through a workaround that achieves the same result.

Data Factory way

Moving files in Azure Data Factory is a two-step process.

- Copy the file from the extracted location to the archival location.

- Delete the file from the extracted location.
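The two steps above can be sketched outside Azure Data Factory as well. The following is only a local-filesystem analogy in Python, not ADF code: the function name `move_file`, the size check, and the sample file `sales.csv` are assumptions made for illustration. It shows why the order matters: the original is deleted only after the copy is confirmed.

```python
import shutil
import tempfile
from pathlib import Path


def move_file(source_file: Path, archive_dir: Path) -> Path:
    """Move a file as two explicit steps: copy, then delete the original."""
    archive_dir.mkdir(parents=True, exist_ok=True)
    target = archive_dir / source_file.name

    # Step 1: copy the file from the extracted location to the archival location.
    shutil.copy2(source_file, target)

    # Guard (an assumption of this sketch): keep the source if the copy looks incomplete.
    if target.stat().st_size != source_file.stat().st_size:
        raise IOError(f"Copy of {source_file} looks incomplete; source kept")

    # Step 2: delete the file from the extracted location.
    source_file.unlink()
    return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        source_dir = Path(tmp) / "source"
        source_dir.mkdir()
        sample = source_dir / "sales.csv"  # hypothetical file name
        sample.write_text("id,amount\n1,100\n")

        archived = move_file(sample, Path(tmp) / "archival")
        print("archived copy exists:", archived.exists())
        print("source file exists:", sample.exists())
```

In Azure Data Factory the same ordering is expressed by making the Delete activity run only after the Copy activity succeeds.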

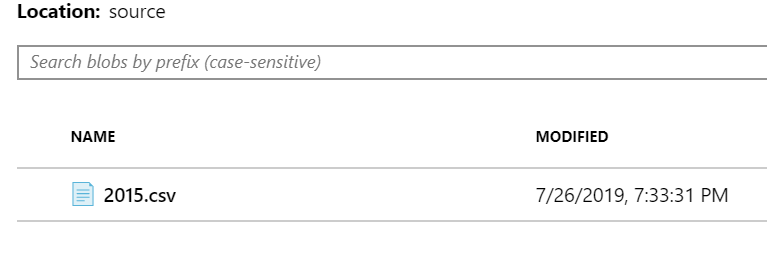

This is achieved with two activities in Azure Data Factory, viz. the Copy activity and the Delete activity. Let us see a demonstration in which we move a file from one Azure blob container to another. The image below shows two containers named source and archival.

Let us build the Data Factory pipeline that moves a file from the source folder to the archival folder. It uses the following two activities:

Copy Activity

The Copy activity is a central activity in Azure Data Factory. It copies files or data from a defined source connection to a destination (sink) connection. You need to specify the source and sink connection/dataset in the Copy activity.

Delete Activity

The Copy activity is followed by the Delete activity, which deletes a file from a specified location. You need to specify the connection/dataset that points to the file to be deleted.

The Pipeline

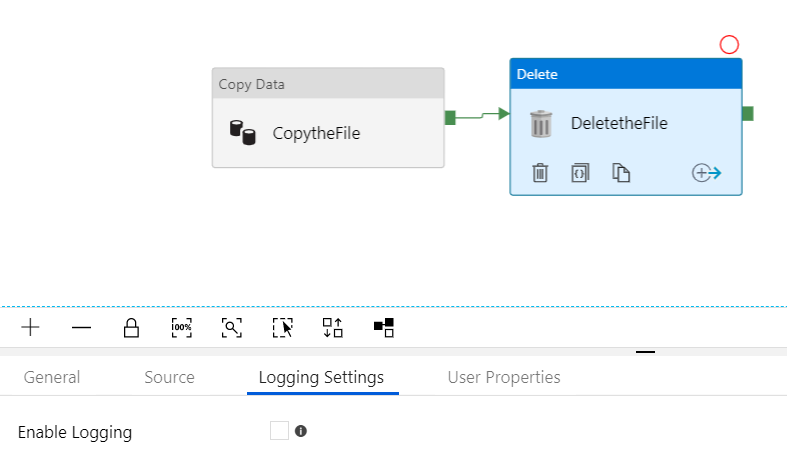

The diagram below shows the pipeline that performs the intended task.

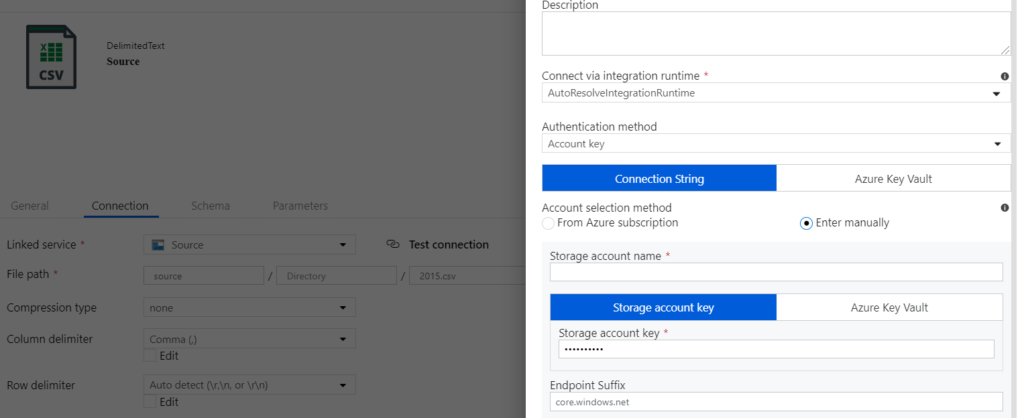
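For readers who prefer the JSON view of a pipeline over the designer, the sketch below builds a comparable pipeline definition as a Python dictionary and prints it as JSON. It is a sketch under stated assumptions, not the pipeline from the screenshots: the activity names, the pipeline name, the dataset names `SourceFile` and `ArchivalFile`, and the choice of Binary datasets are all hypothetical, and the property names follow the ADF pipeline JSON format as commonly documented, so compare them against the JSON exported from your own factory.

```python
import json


def build_move_pipeline(source_dataset: str, archive_dataset: str) -> dict:
    """Return a pipeline definition that copies a file, then deletes the original."""
    copy_activity = {
        "name": "CopyToArchival",  # hypothetical activity name
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": archive_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            # Assumption: both datasets are Binary datasets, so the file is copied as-is.
            "source": {"type": "BinarySource"},
            "sink": {"type": "BinarySink"},
        },
    }
    delete_activity = {
        "name": "DeleteFromSource",  # hypothetical activity name
        "type": "Delete",
        # Run only when the copy succeeded, so a failed copy never removes the source file.
        "dependsOn": [
            {"activity": copy_activity["name"], "dependencyConditions": ["Succeeded"]}
        ],
        "typeProperties": {
            "dataset": {"referenceName": source_dataset, "type": "DatasetReference"},
            "enableLogging": False,
        },
    }
    return {
        "name": "MoveFilePipeline",  # hypothetical pipeline name
        "properties": {"activities": [copy_activity, delete_activity]},
    }


if __name__ == "__main__":
    print(json.dumps(build_move_pipeline("SourceFile", "ArchivalFile"), indent=2))
```

The `dependsOn` entry is the part that turns two independent activities into a move: the Delete activity waits for the Copy activity to report success.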

Copy activity Source Connection

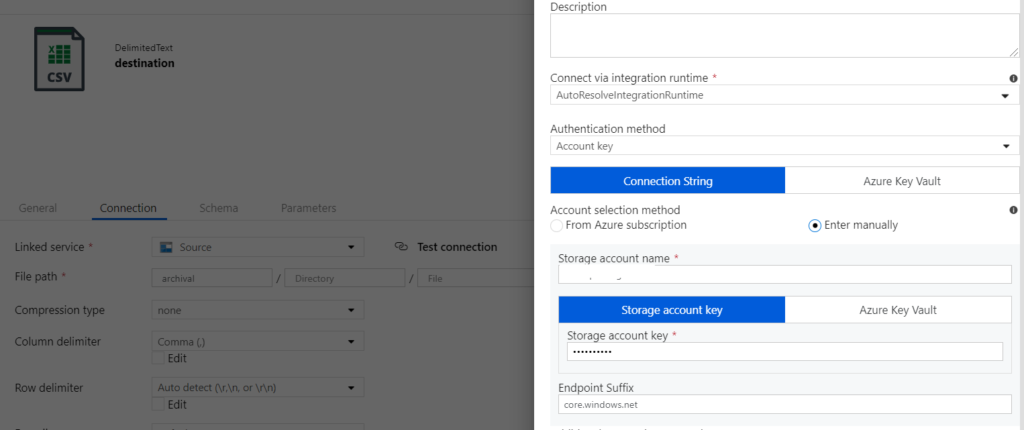

Copy activity Sink Connection

In the Copy activity, the source connection/linked service points to the blob storage location. In this demonstration, ADF authenticates to the blob storage with the account key; you can also use a SAS URI, a service principal, or a managed identity. The sink is another folder/container in the same blob storage account. Next, the Delete activity has a linked service pointing to the source location. Note that the Delete activity has a logging setting that you can enable or disable. When it is enabled, you also choose a location for the log files.

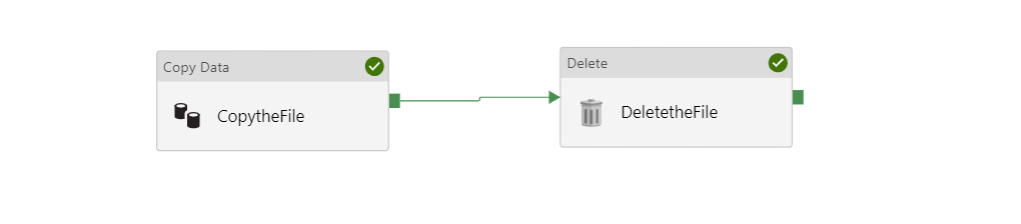
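The connection and logging settings described above can also be sketched as JSON fragments built in Python. Treat this as an illustration under assumptions rather than the exact configuration used in the demonstration: the helper names, the linked service name `BlobStorageLS`, the dataset name `SourceFile`, the log path, and the placeholder credentials are hypothetical, and the property names follow the ADF JSON format as commonly documented. The second helper mirrors the logging behaviour: a log location is required only when logging is enabled.

```python
import json
from typing import Optional


def blob_linked_service(name: str, account_name: str, account_key: str) -> dict:
    """Blob storage linked service that authenticates with the account key."""
    connection_string = (
        "DefaultEndpointsProtocol=https;"
        f"AccountName={account_name};AccountKey={account_key}"
    )
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }


def delete_type_properties(
    dataset: str,
    enable_logging: bool,
    log_linked_service: Optional[str] = None,
    log_path: Optional[str] = None,
) -> dict:
    """typeProperties of a Delete activity, with optional logging settings."""
    props = {
        "dataset": {"referenceName": dataset, "type": "DatasetReference"},
        "enableLogging": enable_logging,
    }
    if enable_logging:
        # With logging enabled, a storage location for the log files is needed.
        if not (log_linked_service and log_path):
            raise ValueError("Logging is enabled but no log location was given")
        props["logStorageSettings"] = {
            "linkedServiceName": {
                "referenceName": log_linked_service,
                "type": "LinkedServiceReference",
            },
            "path": log_path,
        }
    return props


if __name__ == "__main__":
    # Placeholder values only; never hard-code a real account key.
    linked = blob_linked_service("BlobStorageLS", "<accountname>", "<accountkey>")
    delete = delete_type_properties("SourceFile", True, "BlobStorageLS", "logs/delete")
    print(json.dumps({"linkedService": linked, "deleteTypeProperties": delete}, indent=2))
```

In practice, an account key is better kept in a secret store than in the definition itself; the plain connection string here only keeps the sketch short.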

Now, let us see the results of the successful pipeline run.

Source before Move

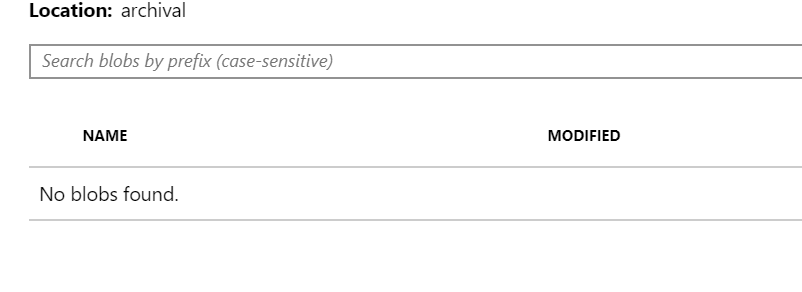

Archival before Move

Source after Move

Archival after Move

Conclusion

Please note that this is a very basic example of moving a single file. Real-life scenarios are rarely that simple and usually involve multiple files spread across multiple folders. We will cover a scenario involving multiple files in our next article, which will also introduce you to some more activities.
